- 1. General Overview

- 2. Introducing the Subscriber Manager

- 3. Installation and Upgrading

- Installation Overview

- Contents of Distribution Files

- Documentation

- System Requirements

- Installation Procedures

- Typical Installation

- Verifying that the Installation was Successful

- Configuring the Subscriber Manager

- Additional Installation Procedures

- Troubleshooting the Installation

- Installing an Application

- System Changes Made by Installation Scripts

- Upgrading

- Uninstalling

- 4. Configuration and Management

- SM Management and Configuration Methods

- Configuring a Subscriber Management Solution

- Using the CLU

- Informative Output

- Parsing CLU Operations and Options

- Reloading the SM Configuration (p3sm)

- Managing the SM (p3sm)

- Managing Subscribers, Mappings, and Properties (p3subs)

- Managing the Subscriber Database (p3subsdb)

- Viewing and Connecting Network Elements (p3net)

- Viewing Subscriber Domains (p3domains)

- Managing the Cable Support Module (p3cable)

- Installing an Application (p3inst)

- Viewing Information of the PRPC Interface Server (p3rpc)

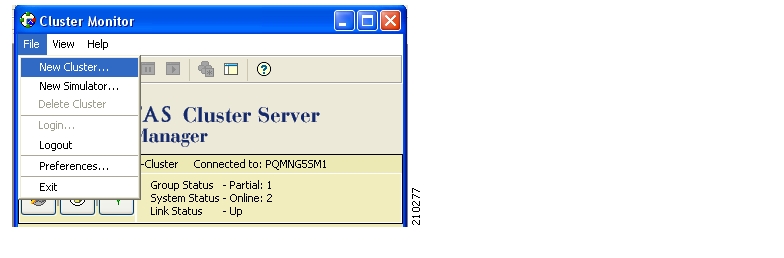

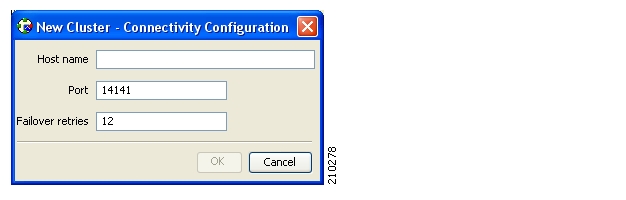

- Managing a Cluster of Two SM Nodes (p3cluster)

- Managing the User Log (p3log)

- Viewing Statistics of the RADIUS Listener (p3radius)

- Utilities

- A. Configuration File Options

- Introduction

- Description of the Configuration File Options

- SM General Section

- SM High Availability Setup Section

- Subscriber State Persistency Section

- SM-LEG Failure Handling Section

- LEG-Domains Association Section

- Domain.XXX Section

- Default Domains Configuration Section

- Auto Logout Section

- Inactive Subscriber Removal Section

- Radius Listener Section

- Radius.NAS.XXX Section

- Radius.Property.Package Section

- Radius.Subscriber ID Section

- RPC.Server Section

- MPLS-VPN Section

- SCE.XXX Section

- FTP Section

- HTTP Tech-IF Section

- RDR Server Section

- Cable Adapter Section

- Data Repository Section

- B. Command-Line Utilities

- C. CPE as Subscriber in Cable Environment

- D. Troubleshooting

- Using the Troubleshooting Chapter

- General Errors

- General Setup Errors

- TimesTen Database Setup Errors

- Introduction

- TimesTen DSN Configuration—Cannot Find Requested DSN

- TimesTen DSN Configuration—Data Source Name Not Found

- TimesTen Database Settings—Cannot Connect to Data Source

- TimesTen Configuration Error—Not Enough Memory

- TimesTen Configuration Error—Incorrect Memory Definitions

- TimesTen Configuration Error—Cannot Create Semaphores

- TimesTen Configuration Error—Cannot Read Data Store File

- TimesTen Configuration Error—Data Store Space Exhausted

- Network Management Command Line Utility (p3net) Errors

- Subscriber Database Command Line Utility (p3subsdb) Errors

- Cable Support Command Line Utility (p3cable) Errors

- Configuration Errors

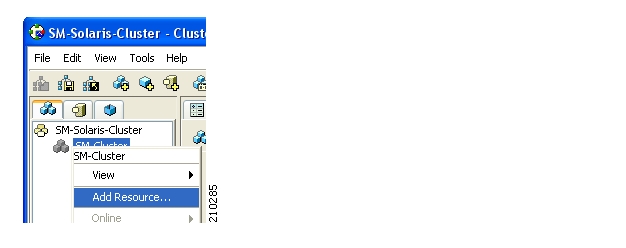

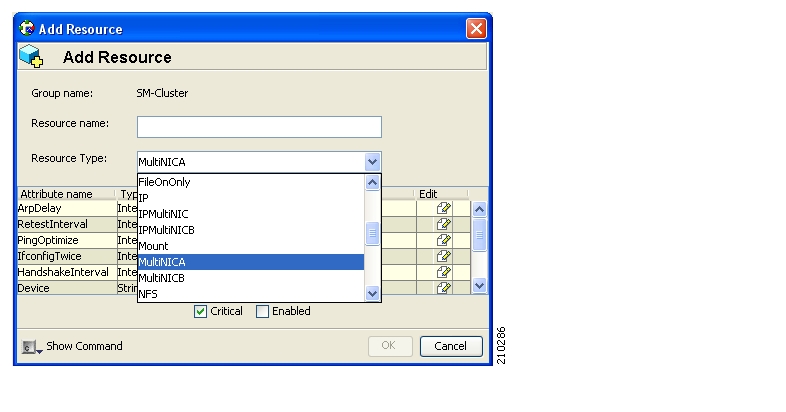

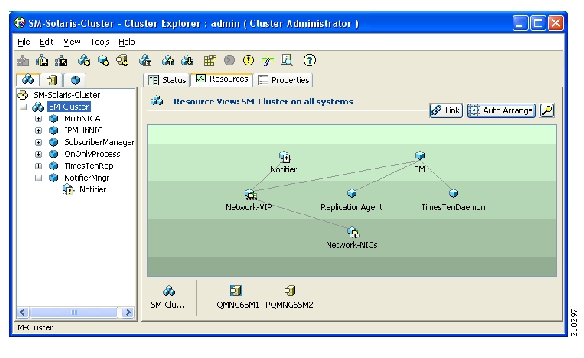

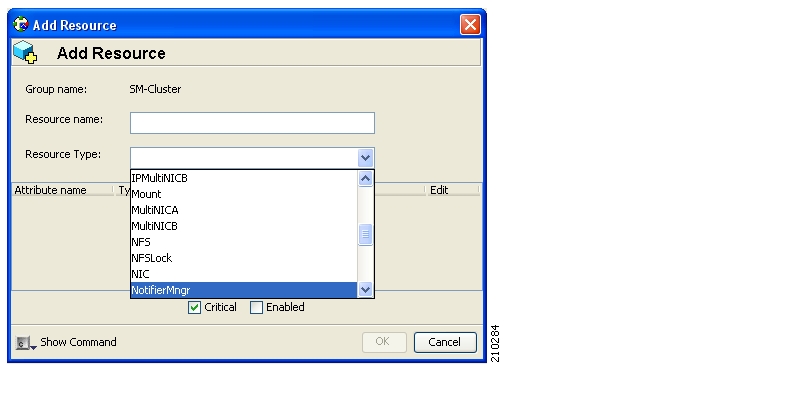

- E. Veritas Cluster Server Requirements and Configuration

This chapter provides a general overview of the Cisco Service Control solution. It introduces the Cisco Service Control concept and the Service Control capabilities. It also briefly describes the hardware capabilities of the Service Control Engine (SCE) platform and the Cisco-specific applications that together compose the total Cisco Service Control solution.

The Cisco Service Control solution is delivered through a combination of purpose-built hardware and specific software solutions that address various service control challenges faced by service providers. The SCE platform is designed to support classification, analysis, and control of Internet/IP traffic.

Service Control enables service providers to create profitable new revenue streams while capitalizing on their existing infrastructure. With the power of Service Control, service providers have the ability to analyze, charge for, and control IP network traffic at multigigabit wire line speeds. The Cisco Service Control solution also gives service providers the tools they need to identify and target high-margin content-based services and to enable their delivery.

As the downturn in the telecommunications industry has shown, IP service providers’ business models need to be reworked to make them profitable. Having spent billions of dollars to build ever larger data links, providers have incurred massive debts and faced rising costs. At the same time, access and bandwidth have become commodities where prices continually fall and profits disappear. Service providers have realized that they must offer value-added services to derive more revenue from the traffic and services running on their networks. However, capturing real profits from IP services requires more than simply running those services over data links; it requires detailed monitoring and precise, real-time control and awareness of services as they are delivered. Cisco provides Service Control solutions that allow the service provider to bridge this gap.

Service providers of any access technology (DSL, cable, mobile, and so on) targeting residential and business consumers must find new ways to get maximum leverage from their existing infrastructure, while differentiating their offerings with enhanced IP services.

The Cisco Service Control Application for Broadband adds a new layer of service intelligence and control to existing networks that can:

- Report and analyze network traffic at subscriber and aggregate level for capacity planning
- Provide customer-intuitive tiered application services and guarantee application SLAs
- Implement different service levels for different types of customers, content, or applications
- Identify network abusers who are violating the Acceptable Use Policy
- Identify and manage peer-to-peer, NNTP (news) traffic, and spam abusers
- Enforce the Acceptable Use Policy (AUP)
- Integrate Service Control solutions easily with existing network elements and BSS/OSS systems

The core of the Cisco Service Control solution is the purpose-built network hardware device: the Service Control Engine (SCE). The core capabilities of the SCE platform, which support a wide range of applications for delivering Service Control solutions, include:

- Subscriber and application awareness—Application-level drilling into IP traffic for real-time understanding and controlling of usage and content at the granularity of a specific subscriber.

  - Subscriber awareness—The ability to map between IP flows and a specific subscriber in order to maintain the state of each subscriber transmitting traffic through the SCE platform and to enforce the appropriate policy on this subscriber’s traffic.

    Subscriber awareness is achieved either through dedicated integrations with subscriber management repositories, such as a DHCP or a RADIUS server, or by sniffing RADIUS or DHCP traffic.

  - Application awareness—The ability to understand and analyze traffic up to the application protocol layer (Layer 7).

    For application protocols implemented using bundled flows (such as FTP, which is implemented using Control and Data flows), the SCE platform understands the bundling connection between the flows and treats them accordingly.

- Application-layer, stateful, real-time traffic control—The ability to perform advanced control functions, including granular bandwidth metering and shaping, quota management, and redirection, using application-layer stateful real-time traffic transaction processing. This requires highly adaptive protocol and application-level intelligence.

- Programmability—The ability to quickly add new protocols and easily adapt to new services and applications in the ever-changing service provider environment. Programmability is achieved using the Cisco Service Modeling Language (SML).

  Programmability allows new services to be deployed quickly and provides an easy upgrade path for network, application, or service growth.

- Robust and flexible back-office integration—The ability to integrate with existing third-party systems at the service provider, including provisioning systems, subscriber repositories, billing systems, and OSS systems. The SCE provides a set of open and well-documented APIs that allow a quick and robust integration process.

- Scalable high-performance service engines—The ability to perform all these operations at wire speed.

The SCE family of programmable network devices is capable of performing application-layer stateful-flow inspection of IP traffic, and controlling that traffic based on configurable rules. The SCE platform is a purpose-built network device that uses ASIC components and RISC processors to go beyond packet counting and delve deeper into the contents of network traffic. Providing programmable, stateful inspection of bidirectional traffic flows and mapping these flows with user ownership, the SCE platforms provide real-time classification of network usage. This information provides the basis for the advanced traffic-control and bandwidth-shaping functionality of the SCE platform. Where the functionality of most bandwidth shapers ends, the SCE platform provides more control and shaping options, including:

- Layer 7 stateful wire-speed packet inspection and classification
- Robust support for over 600 protocols and applications, including:
  - General—HTTP, HTTPS, FTP, TELNET, NNTP, SMTP, POP3, IMAP, WAP, and others
  - P2P file sharing—FastTrack-KazaA, Gnutella, BitTorrent, Winny, Hotline, eDonkey, DirectConnect, Piolet, and others
  - P2P VoIP—Skype, Skinny, DingoTel, and others
  - Streaming and Multimedia—RTSP, SIP, HTTP streaming, RTP/RTCP, and others
- Programmable system core for flexible reporting and bandwidth control
- Transparent network and BSS/OSS integration into existing networks
- Subscriber awareness that relates traffic and usage to specific customers

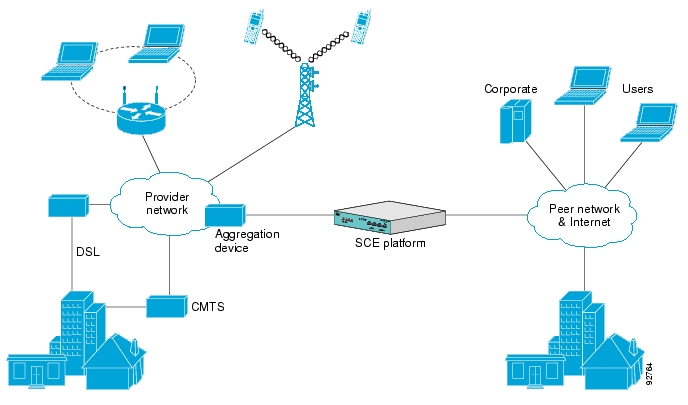

The following diagram illustrates a common deployment of an SCE platform in a network.

Service configuration management is the ability to configure the general service definitions of a service control application. A service configuration file containing settings for traffic classification, accounting and reporting, and control is created and applied to an SCE platform. The SCA BB application provides tools to automate the distribution of these configuration files to SCE platforms. This simple, standards-based approach makes it easy to manage multiple devices in a large network.

Service Control provides an easy-to-use GUI to edit and create these files and a complete set of APIs to automate their creation.

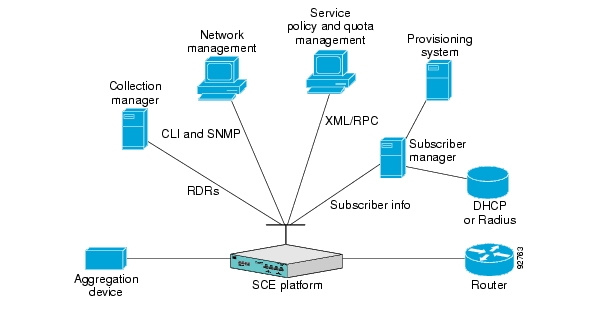

The Cisco Service Control solution includes a complete management infrastructure that provides the following management components to manage all aspects of the solution:

- Network management
- Subscriber management
- Service Control management

These management interfaces are designed to comply with common management standards and to integrate easily with existing OSS infrastructure.

Cisco provides complete network FCAPS (Fault, Configuration, Accounting, Performance, Security) Management.

Two interfaces are provided for network management:

- Command-Line Interface (CLI)—Accessible through the Console port or through a Telnet or SSH connection, the CLI is used for configuration and security functions.
- SNMP—Provides fault management (via SNMP traps) and performance monitoring functionality.

Where the SCA BB solution must enforce different policies on different subscribers and track usage on an individual subscriber basis, the Cisco Service Control Management Suite (SCMS) Subscriber Manager (SM) may be used as middleware software for bridging between the OSS and the SCE platforms. Subscriber information is stored in the SM database and can be distributed between multiple platforms according to actual subscriber placement.

The SM provides subscriber awareness by mapping network IDs to subscriber IDs. It can obtain subscriber information using dedicated integration modules that integrate with AAA devices, such as RADIUS or DHCP servers.

The SCE platform can obtain subscriber information from the SM in one of two ways:

- Push Mode—The SM pushes subscriber information to the SCE platform automatically when a subscriber logs in.
- Pull Mode—The SM sends subscriber information to the SCE platform in response to a query from the SCE platform.

The Cisco Service Control solution generates usage data and statistics from the SCE platform and forwards them as Raw Data Records (RDRs), using a simple TCP-based protocol (RDR-Protocol). The Cisco Service Control Management Suite (SCMS) Collection Manager (CM) software implements the collection system, listening in on RDRs from one or more SCE platforms and processing them on the local machine. The data is then stored for analysis and reporting functions, and for the collection and presentation of data to additional OSS systems such as billing.

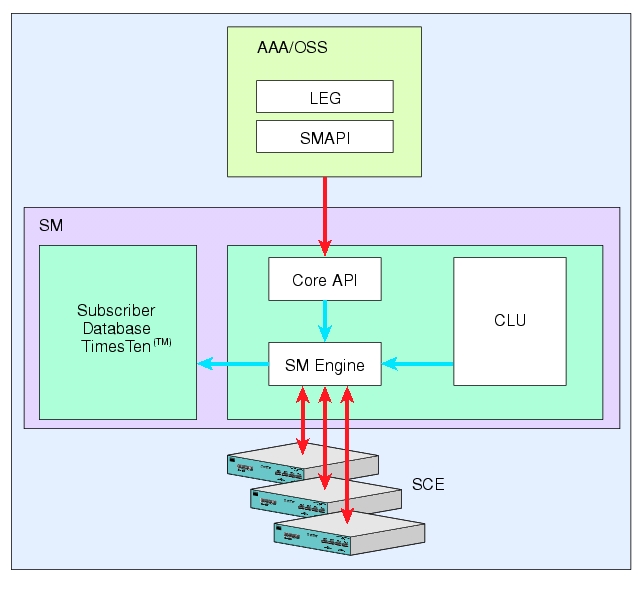

The Subscriber Manager (SM) is a middleware software component that supplies subscriber information for multiple SCE platforms in deployments where dynamic subscriber awareness is required. It does this in one of two ways:

- By pre-storing the subscriber information
- By serving as a stateful bridge between an AAA system or a provisioning system and the SCE platforms

The SCE platforms use subscriber information to provide subscriber-aware functionality, per-subscriber reporting, and policy enforcement.

Some Cisco Service Control solutions can also operate without subscriber awareness:

- Subscriber-less—Control- and link-level analysis functions are provided at a global device resolution.
- Anonymous subscriber—The system dynamically creates “anonymous” subscribers per IP address. User-defined IP address ranges may then be used to apply different policies to different groups of anonymous subscribers.
- Static subscriber awareness—Subscriber awareness is required, but allocation of network IDs (mainly IP addresses) to subscribers is static.

In these three modes, the SCE platform handles all subscriber-related functionality and an SM module is not required.

Note

Starting with SM version 2.2, you can configure the SM to operate either with or without a cluster of two SM nodes. The added functionality when operating in a cluster topology provides powerful new features such as fail-over and high availability. (For definitions of italicized terms, see the Glossary of Terms.) The information in most of this chapter is applicable whether using a cluster or not. However, for clarity, information that is applicable only when using a cluster is presented separately at the end of this chapter, in the section Subscriber Manager Fail-Over.

A subscriber is defined as a managed entity on the subscriber side of the SCE platform, to which accounting and policy are applied individually. The subscriber side of the SCE platform is the side of the SCE platform that points to the access or downstream part of the topology, as opposed to the network side, which points to the core of the network.

The SM addresses the following issues in allowing dynamic subscriber awareness:

- Mapping—The SCE platform encounters flows with network IDs (IP addresses) that change dynamically, and it requires dynamic mapping between those network IDs and the subscriber IDs. The SM database contains the network IDs that map to the subscriber IDs. This is the main functionality of the SM.
- Policy—The SM serves as a repository of policy information per subscriber. The policy information may be preconfigured in the SM, or dynamically provisioned when the mapping information is provided.
- Capacity—The SCE platform or platforms may need to handle, over time, more subscribers than they can hold concurrently. In this case, the SM serves as an external repository for subscriber information, and only the online or active subscribers are introduced to the SCE platform.
- Location—The SM can send subscriber information only to the relevant SCE platforms, when such functionality is required. This is implemented using the domains mechanism or the Pull mode (see Pull Mode).

The SM database (see SM Database) can function in one of two ways:

- As the only source for subscriber information when the SM works in standalone mode
- As a subscriber information cache when the SM serves as a bridge between a group of SCE devices and the customer Authentication, Authorization, and Accounting (AAA) and Operational Support Systems (OSS)

The following figure shows the flow of subscriber information through the SM.

The flow takes place as follows:

Subscriber information enters the SM in one of two ways:

- Automatically upon the subscriber going online—A Login Event Generator (LEG) software module that integrates with the customer AAA system (such as a DHCP server, a RADIUS server, or a Network Access Server (NAS)) identifies a subscriber login event and sends it to the SM by using the SM API.
- Manual setup—Subscriber information is imported into the SM from a file or by using the Command-Line Utilities (CLU).

Automatic and manual modes can be combined. For example, all subscribers may be loaded to the SM via manual setup, and a subset of the subscriber record (domain, network ID, and so on) changed automatically through the SM API.

In automatic mode, the SCMS SM Java or C/C++ APIs are used for delegating subscriber information to the SM (see the Cisco SCMS SM Java API Programming Guide or the Cisco SCMS SM C/C++ API Programming Guide).

The SM Engine:

- Stores subscribers in the subscriber database
- Introduces subscriber information to SCE platforms

  The information may be passed automatically to the SCE platform, or it may reside in the SM database until requested by the SCE platform.

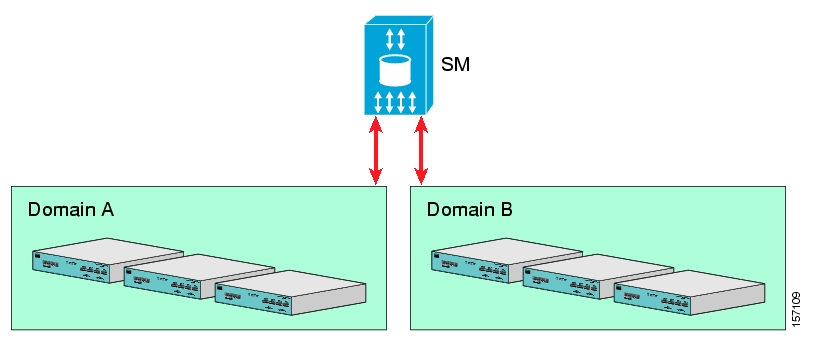

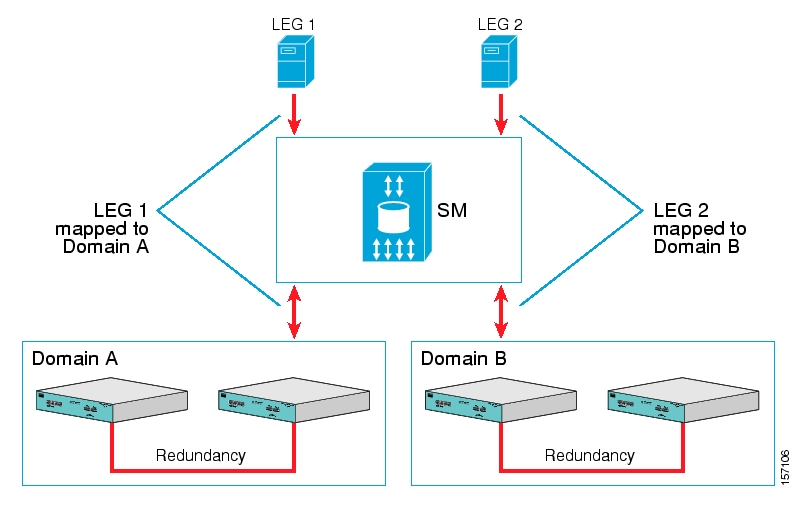

The SM may be configured with more than one SCE platform. These SCE platforms may be grouped into domains. Each domain represents a group of SCE platforms that serve the same group of subscribers.

The subscribers of the service provider may be divided into the following logical types (at any given moment):

- Offline subscriber—A subscriber that currently does not have any IP address and as such does not generate any IP traffic. Such subscribers are not stored in the SCE platform.
- Online subscriber—A subscriber that is currently online.

  At any particular time, a certain number of online subscribers will be idle, that is, connected to the service provider but not generating any IP traffic.

- Active subscriber—An online subscriber that is actually generating IP traffic (such as by browsing the Internet or downloading a file).

The total number of subscribers is the number of all subscribers whose IP traffic might traverse the SCE platforms in a specific deployment.

There are four general scenarios for a network system using the SCE platforms:

1. The total number of subscribers can be statically stored in a single SCE platform.

   This is the simplest, most reliable scenario. It may not require the use of the SM.

2. The total number of subscribers exceeds the capacity of the SCE platform, but the number of online subscribers predicted at any time can be statically stored in the SCE platform.

   It is recommended to use the SM in Push mode. See Push Mode.

3. The number of online subscribers exceeds the capacity of the SCE platform, but the number of active subscribers predicted at any one time can be statically stored in the SCE platform.

   The SM must be used in Pull mode. See Pull Mode.

4. The number of active subscribers predicted at any one time exceeds the capacity of the SCE platform.

   Multiple SCE devices must be installed to divide the subscribers among the SCE platforms. If the system is divided into domains (see Domains), so that the SM knows in advance to which SCE platform a particular subscriber should be sent, Push mode may be used. Otherwise, Pull mode is required.
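The four scenarios amount to a simple decision procedure. The following Java sketch encodes it. `DeploymentAdvisor`, its enum, and the simplification of describing an SCE platform by a single subscriber-capacity figure are hypothetical and are not part of the SM software.

```java
/** Hypothetical helper that mirrors the four deployment scenarios described above. */
final class DeploymentAdvisor {

    enum Recommendation {
        STATIC_SM_OPTIONAL,   // scenario 1: the SM may not be required
        SM_PUSH_MODE,         // scenario 2, or scenario 4 with domains
        SM_PULL_MODE          // scenario 3, or scenario 4 without domains
    }

    /**
     * @param totalSubscribers      all subscribers whose traffic may traverse the SCE platforms
     * @param onlineSubscribers     predicted peak number of online subscribers
     * @param activeSubscribers     predicted peak number of active subscribers
     * @param sceCapacity           subscribers one SCE platform can hold (simplifying assumption)
     * @param domainsKnownInAdvance whether domains tell the SM which SCE serves each subscriber
     */
    static Recommendation recommend(long totalSubscribers, long onlineSubscribers,
                                    long activeSubscribers, long sceCapacity,
                                    boolean domainsKnownInAdvance) {
        if (totalSubscribers <= sceCapacity) {
            return Recommendation.STATIC_SM_OPTIONAL;  // everything fits statically
        }
        if (onlineSubscribers <= sceCapacity) {
            return Recommendation.SM_PUSH_MODE;        // all online subscribers fit
        }
        if (activeSubscribers <= sceCapacity) {
            return Recommendation.SM_PULL_MODE;        // only active subscribers fit
        }
        // Multiple SCE platforms are required; Push mode works only if domains
        // let the SM know in advance which platform serves each subscriber.
        return domainsKnownInAdvance ? Recommendation.SM_PUSH_MODE
                                     : Recommendation.SM_PULL_MODE;
    }
}
```

For example, with a capacity of 2,000 subscribers per platform, 5,000 total subscribers and 1,500 predicted online subscribers fall into the second scenario, so the sketch recommends Push mode.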

For specific scenarios using the SM with multiple servers and/or SCE platforms, see System Configuration Examples.

The SM uses TimesTen, a commercial in-memory relational database optimized for high performance, with a background persistency scheme. The in-memory database efficiently stores and retrieves subscriber records.

A subscriber record stored in the SM Database (SM-DB) consists of the following components:

- Subscriber name (key)—A string identifying the subscriber in the SM. Maximum length: 40 characters. The name can be case-sensitive or case-insensitive, depending on the configuration file. By default, the database is case-sensitive. If the database is case-insensitive, the SM converts the name to lower case when updating or querying the database.
- Domain (secondary key)—A string that specifies which group of SCE devices handles this subscriber.
- Subscriber network IDs (mappings)—A list of network identifiers, such as IP addresses or VLANs. The SCE uses these identifiers to associate network traffic with subscriber records.
- Subscriber policy—A list of properties that instruct the SCE what to do with the network traffic of this subscriber. The content of this list is application-specific.
- Subscriber state (for example, quota used)—A field that encodes the subscriber state, as recorded by the last SCE platform that handled the network traffic of this subscriber.

You can access the subscribers using one of two indexes:

- Subscriber name
- Subscriber name + domain
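The record layout and the two access paths can be pictured with a small in-memory model. This is a hypothetical Java sketch, not the SM database schema or the SM API: `SubscriberStore` and its methods are invented, the TimesTen tables are replaced by a hash map, and the `caseSensitive` flag stands in for the configuration file setting described above.

```java
import java.util.*;

/** Hypothetical in-memory model of SM-DB subscriber records; not the real schema or API. */
final class SubscriberStore {

    /** One record: name (key), domain (secondary key), mappings, policy, and opaque state. */
    static final class Subscriber {
        final String name;
        final String domain;
        final List<String> networkIds;     // e.g. IP addresses or VLANs
        final Map<String, String> policy;  // application-specific properties
        final byte[] state;                // opaque state written by the last SCE

        Subscriber(String name, String domain, List<String> networkIds,
                   Map<String, String> policy, byte[] state) {
            this.name = name;
            this.domain = domain;
            this.networkIds = List.copyOf(networkIds);
            this.policy = Map.copyOf(policy);
            this.state = state.clone();
        }
    }

    private final boolean caseSensitive;  // stands in for the configuration file setting
    private final Map<String, Subscriber> byName = new HashMap<>();

    SubscriberStore(boolean caseSensitive) {
        this.caseSensitive = caseSensitive;
    }

    /** A case-insensitive store converts names to lower case on both update and query. */
    private String key(String name) {
        return caseSensitive ? name : name.toLowerCase(Locale.ROOT);
    }

    void put(String name, String domain, List<String> networkIds, Map<String, String> policy) {
        String k = key(name);
        byName.put(k, new Subscriber(k, domain, networkIds, policy, new byte[0]));
    }

    /** Index 1: subscriber name. */
    Optional<Subscriber> find(String name) {
        return Optional.ofNullable(byName.get(key(name)));
    }

    /** Index 2: subscriber name + domain; matches only if the record is in that domain. */
    Optional<Subscriber> find(String name, String domain) {
        return find(name).filter(s -> s.domain.equals(domain));
    }
}
```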

Note that in a cluster redundancy topology, the database on the active machine replicates the subscriber data to the database on the standby machine. For additional information, see the Subscriber Manager (SM) Fail-Over section.

The subscriber ID is a string that uniquely identifies each subscriber from the customer's perspective. For example, it may represent a subscriber name or a CM MAC address. This section lists the formatting rules of a subscriber ID.

It may contain up to 64 characters. The following characters may be used:

- Alphanumerics
- $ (dollar sign)
- . (period or dot)
- _ (underscore)
- - (minus sign or hyphen)
- % (percent sign)
- / (slash)
- ~ (tilde)
- ! (exclamation mark)
- & (ampersand)
- : (colon)
- ' (apostrophe)
- # (number sign)
- () (parentheses)
- @ (at sign)
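These rules can be checked mechanically before a subscriber ID is sent to the SM. The Java sketch below is a hypothetical helper, not part of the SM API; it assumes that "alphanumerics" means ASCII letters and digits and that an ID must contain at least one character.

```java
import java.util.regex.Pattern;

/** Hypothetical subscriber ID check based on the formatting rules above. */
final class SubscriberIdRules {

    // Allowed: A-Z a-z 0-9 $ . _ - % / ~ ! & : ' # ( ) @, length 1 to 64.
    private static final Pattern VALID_ID =
            Pattern.compile("[A-Za-z0-9$._\\-%/~!&:'#()@]{1,64}");

    static boolean isValid(String subscriberId) {
        // matches() requires the whole string to match, not just a prefix.
        return subscriberId != null && VALID_ID.matcher(subscriberId).matches();
    }
}
```

With this helper, the three sample IDs that follow are accepted, while an ID containing a space, or one longer than 64 characters, is rejected.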

For example:

```java
// Valid subscriber IDs
String subID1 = "john";
String subID2 = "john@yahoo.com";
String subID3 = "00-B0-D0-86-BB-F7";
```

Use the SM API for:

- Altering the fields of an already existing subscriber record
- Setting up new subscribers in the SM
- Performing queries

The SM API is provided in C, C++, and Java. It serves as the bottom-most layer of every LEG.

SM API programmer references are provided in the Cisco SCMS SM C/C++ API and the Cisco SCMS SM Java API Programmer Guides.

The SM Login Event Generators (LEGs) are software components that use the SM API to generate subscriber-record update messages (such as login/logout) and send them to the SM. LEGs are usually installed with AAA/OSS platforms, or with provisioning systems. They translate events generated by these systems to Cisco Service Control subscriber update events.

The unique functionality of each LEG depends on the specific software package with which it interacts. For example, RADIUS LEGs, DHCP LEGs, or LEGs for third-party provisioning systems may be implemented. LEGs can set up subscribers or alter any of the fields of an existing subscriber record.

You can connect multiple LEGs to a single SM. Conversely, a single LEG can generate events for multiple domains.
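The role of a LEG can be sketched as a thin translator between AAA events and SM update calls. In the Java sketch below, `SmClient` is a hypothetical stand-in for the SM API (the real Java and C/C++ APIs are documented in the programmer guides referenced above), and `AaaEvent`, `SketchLeg`, and `RecordingSmClient` are invented for illustration. The event carries its own domain, so one LEG can serve several domains.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for the SM API; NOT the real Cisco SM API. */
interface SmClient {
    void login(String subscriberId, String domain, String ipAddress);
    void logout(String subscriberId, String domain, String ipAddress);
}

/** Invented AAA event, for example derived from a RADIUS accounting start or stop. */
final class AaaEvent {
    enum Kind { START, STOP }

    final Kind kind;
    final String user;
    final String ip;
    final String domain;

    AaaEvent(Kind kind, String user, String ip, String domain) {
        this.kind = kind;
        this.user = user;
        this.ip = ip;
        this.domain = domain;
    }
}

/** A LEG translates events from an AAA/OSS system into subscriber updates on the SM. */
final class SketchLeg {
    private final SmClient sm;

    SketchLeg(SmClient sm) {
        this.sm = sm;
    }

    void onEvent(AaaEvent e) {
        switch (e.kind) {
            case START: sm.login(e.user, e.domain, e.ip); break;   // subscriber goes online
            case STOP:  sm.logout(e.user, e.domain, e.ip); break;  // subscriber goes offline
        }
    }
}

/** Records calls instead of contacting an SM, so the LEG logic can be exercised offline. */
final class RecordingSmClient implements SmClient {
    final List<String> calls = new ArrayList<>();

    public void login(String s, String d, String ip)  { calls.add("login " + s + " " + d + " " + ip); }
    public void logout(String s, String d, String ip) { calls.add("logout " + s + " " + d + " " + ip); }
}
```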

As illustrated in the figure in Flow of Subscriber Information, the SM introduces subscriber data to the SCE platforms. This operation functions in one of two modes:

- Push—This is the simpler and recommended mode.
- Pull—Use this mode only in special cases, as explained below.

Push or Pull mode is configured for the entire SM system.

For information detailing the configuration of the subscriber integration modes, see the SM General Section.

In Push mode, immediately after adding or changing a subscriber record, the SM distributes, or pushes, this information to the relevant SCE platforms, as determined by the subscriber domain. When the subscriber starts producing traffic through the SCE platform, it is ready with the required subscriber information.

In some scenarios, factors such as capacity limitations make it impossible to use Push mode.

Note

Use Push mode only if all online subscribers associated with a domain can be loaded simultaneously into all the SCE platforms in the domain.

In Pull mode, the SCE platforms are not notified in advance of subscriber information. When an SCE platform cannot associate the IP traffic with a subscriber, it will request, or pull, the information from the SM.

The advantage of Pull mode is that there is no need to know in advance which SCE platform serves which subscriber.

The disadvantages of Pull mode are:

- Increased communication in the SM-SCE link
- Increased load on the SM, as it processes incoming requests from both the SCE device and the LEG

Note

By default, the SCE does not request subscriber information from the SM. You must configure anonymous groups in the SCE for the set of IP ranges that should be requested from the SM. See the SCE User Guide for more details on anonymous subscriber groups.

Note

Pull mode must be used when all online subscribers associated with a domain exceed the capacity of the SCE platforms in the domain (but the number of active subscribers can still be loaded into the SCE platforms in the domain).
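The difference between the two modes lies in who initiates the transfer. The following Java sketch models it with in-memory maps; each SCE platform is reduced to a table from IP address to subscriber name, and `IntroductionSketch` and its methods are hypothetical. In Push mode the SM copies a mapping to every SCE platform in the subscriber domain as soon as the record changes. In Pull mode nothing is sent until an SCE platform asks about an IP address it cannot associate with a subscriber, and, as described under domains, the request is served only for subscribers in the requester's domain.

```java
import java.util.*;

/** Hypothetical model of how the SM introduces subscribers to SCE platforms. */
final class IntroductionSketch {
    enum Mode { PUSH, PULL }

    /** An SCE platform, reduced to its IP-to-subscriber table. */
    static final class Sce {
        final String domain;
        final Map<String, String> subscriberByIp = new HashMap<>();

        Sce(String domain) {
            this.domain = domain;
        }
    }

    private final Mode mode;  // configured for the entire SM system
    private final List<Sce> sces = new ArrayList<>();
    private final Map<String, String> subscriberByIp = new HashMap<>();   // SM database mappings
    private final Map<String, String> domainBySubscriber = new HashMap<>();

    IntroductionSketch(Mode mode) {
        this.mode = mode;
    }

    Sce addSce(String domain) {
        Sce sce = new Sce(domain);
        sces.add(sce);
        return sce;
    }

    /** A LEG or the CLU adds or changes a record (stale mappings are not cleaned up here). */
    void update(String subscriber, String domain, String ip) {
        subscriberByIp.put(ip, subscriber);
        domainBySubscriber.put(subscriber, domain);
        if (mode == Mode.PUSH) {
            for (Sce sce : sces) {
                if (sce.domain.equals(domain)) {
                    sce.subscriberByIp.put(ip, subscriber);  // pushed immediately
                }
            }
        }
    }

    /** An SCE platform sees traffic from an unknown IP address and asks the SM. */
    Optional<String> pull(Sce requester, String ip) {
        if (mode != Mode.PULL) {
            return Optional.empty();
        }
        String subscriber = subscriberByIp.get(ip);
        if (subscriber == null || !requester.domain.equals(domainBySubscriber.get(subscriber))) {
            return Optional.empty();  // unknown, or outside the requester's domain
        }
        requester.subscriberByIp.put(ip, subscriber);
        return Optional.of(subscriber);
    }
}
```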

The following table summarizes the differences between the Push mode and Pull mode:

Table 2.1. Differences Between Push Mode and Pull Mode

| Aspect of Use | Push Mode | Pull Mode |
|---|---|---|
| When to use | For simple provisioning of subscriber information to the SCE platform | For real-time, on-demand subscriber information retrieval. Used in large-scale deployments: |
| Functional flow at access time | | |
| Subscriber information at the SCE platform | SCE platform always has current subscriber information: | SCE gets subscriber information on demand |

The SM provides the option of partitioning SCE platforms and subscribers into subscriber domains.

The domains concept enables a single SM to handle several separate network sections and provides better control over how subscribers are introduced to the SCE platforms.

A subscriber domain is a group of SCE platforms that share a group of subscribers. The subscriber traffic can pass through any SCE platform in the domain. A subscriber can belong to only a single domain. Usually a single SCE platform serves a subscriber at any given time.

Domains are managed differently in the Push and Pull modes:

In Push mode, all the subscribers in a subscriber domain are sent to all SCE platforms in the domain. The main reason for having several SCE platforms in a single domain is redundancy.

In Pull mode, the pull requests are handled only for subscribers in the domain of the pulling SCE platform. In Pull mode, usually a single domain covers all the subscribers.

The system is configured with one default subscriber domain called “subscribers”. When adding an SCE platform to the SM, it is automatically added to this default domain, unless otherwise specified. Subscribers are also associated with this default subscriber domain, unless otherwise specified. To associate a subscriber with a different domain, first define this domain in the configuration file, and then explicitly specify it when adding the subscriber to the SM. To associate an SCE platform with a non-default subscriber domain, edit and reload the configuration file. For more information, see Chapter 4, Configuration and Management.
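The domain assignment rules can be summarized in a short sketch. The Java class below is hypothetical: its constructor argument stands in for the domains defined in the configuration file, and its methods stand in for adding SCE platforms and subscribers. It enforces only the rules stated above: a default domain named "subscribers" always exists, SCE platforms and subscribers fall into it unless another domain is specified, a non-default domain must be defined before use, and a subscriber belongs to exactly one domain.

```java
import java.util.*;

/** Hypothetical model of subscriber-domain assignment; not the SM configuration API. */
final class DomainRegistry {
    static final String DEFAULT_DOMAIN = "subscribers";

    private final Set<String> definedDomains = new HashSet<>();
    private final Map<String, String> domainOfSce = new HashMap<>();
    private final Map<String, String> domainOfSubscriber = new HashMap<>();

    /** @param configuredDomains domains defined in the configuration file, besides the default */
    DomainRegistry(Collection<String> configuredDomains) {
        definedDomains.add(DEFAULT_DOMAIN);
        definedDomains.addAll(configuredDomains);
    }

    void addSce(String sce)               { addSce(sce, DEFAULT_DOMAIN); }
    void addSubscriber(String subscriber) { addSubscriber(subscriber, DEFAULT_DOMAIN); }

    void addSce(String sce, String domain) {
        domainOfSce.put(sce, requireDefined(domain));
    }

    /** A subscriber belongs to a single domain, so re-adding it replaces the old association. */
    void addSubscriber(String subscriber, String domain) {
        domainOfSubscriber.put(subscriber, requireDefined(domain));
    }

    /** In Push mode, these are the SCE platforms that receive the subscriber. */
    List<String> scesServing(String subscriber) {
        String domain = domainOfSubscriber.get(subscriber);
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, String> e : domainOfSce.entrySet()) {
            if (e.getValue().equals(domain)) {
                result.add(e.getKey());
            }
        }
        Collections.sort(result);  // deterministic order
        return result;
    }

    private String requireDefined(String domain) {
        if (!definedDomains.contains(domain)) {
            throw new IllegalArgumentException("domain not defined in configuration: " + domain);
        }
        return domain;
    }
}
```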

A communication failure may occur either on the LEG-SM communication link or on the SM-SCE communication link. A communication failure may be caused by a network failure or by a failure of the SCE, the SM, or the LEG. High availability and recovery from an SM failure are discussed in SM Cluster.

When configuring the system, consider three issues related to communication failures:

- Communication failure detection—A timeout after which a communication failure is announced
- Communication failure handling—The action to be taken when communication on the link fails
- Communication failure recovery—The action to be taken when communication on the link resumes

A communication failure is detected by either of two mechanisms:

- Monitoring the TCP socket connection state (all peers perform this monitoring)
- A keep-alive mechanism at the PRPC protocol level

There are two configuration options for handling communication failures:

- Ignore communication failures
- Erase the subscriber mappings in the database and start handling flows without subscriber awareness

Erasing the mappings in the database is useful when you want to avoid incorrect mappings of subscribers to IP addresses. To use this option, configure the system to clear all mappings upon failure.
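Keep-alive detection and the two handling options combine into a small state machine. In the hypothetical Java sketch below, a link is declared failed when no keep-alive has arrived within a timeout, and the configured policy decides whether the mappings are kept or cleared. `LinkMonitor`, `FailurePolicy`, and the explicit millisecond timestamps (used so the logic can be exercised without real timers) are assumptions, not SM configuration names.

```java
import java.util.Map;

/** Hypothetical keep-alive failure detector with a configurable handling policy. */
final class LinkMonitor {
    enum FailurePolicy { IGNORE, CLEAR_MAPPINGS }

    private final long timeoutMillis;
    private final FailurePolicy policy;
    private final Map<String, String> mappings;  // e.g. IP address -> subscriber
    private long lastKeepAliveMillis;
    private boolean failed;

    LinkMonitor(long timeoutMillis, FailurePolicy policy,
                Map<String, String> mappings, long nowMillis) {
        this.timeoutMillis = timeoutMillis;
        this.policy = policy;
        this.mappings = mappings;
        this.lastKeepAliveMillis = nowMillis;
    }

    /** A keep-alive arrived: communication on the link has resumed. */
    void onKeepAlive(long nowMillis) {
        lastKeepAliveMillis = nowMillis;
        failed = false;  // a deployment-specific recovery action would run here
    }

    /** Called periodically; returns true only when this check detects a new failure. */
    boolean check(long nowMillis) {
        if (failed || nowMillis - lastKeepAliveMillis <= timeoutMillis) {
            return false;
        }
        failed = true;
        if (policy == FailurePolicy.CLEAR_MAPPINGS) {
            mappings.clear();  // avoid stale subscriber-to-IP mappings
        }
        return true;
    }

    boolean isFailed() {
        return failed;
    }
}
```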

The SM supports high availability using the Veritas Cluster Server (VCS) technology. In a high availability topology, the SM software runs on two machines, designated as the active machine and the standby machine. Subscriber data is continuously replicated from the active to the standby machine, ensuring there is minimal data loss in case of active SM failure. When the active machine fails, the standby machine discovers the failure and becomes active. For additional information, see the Subscriber Manager Fail-Over section.

SM management includes configuration, fault management, logging management, and performance management.

Configure the SM using the following:

- Configuration file (p3sm.cfg)—For setting all configuration parameters of the Subscriber Manager.

  Note

  Changes that you make in the configuration file take effect only when you load the configuration file using the Command-Line Utilities (CLU) or when you restart the SM.

  For a detailed description of this file, see Appendix A, Configuration File Options.

- Command-Line Utilities (CLU)—For ongoing subscriber management and monitoring of the SM. CLU commands are shell tools that you can use to manage subscribers, install or update applications, retrieve the user log, and load the configuration file when updated.

  For a complete description of the Command-Line Utilities, see Appendix B, Command-Line Utilities.

  The CLU is invoked locally on the SM hosting platform, either directly or through a Telnet (or SSH) session to that platform.

Use the SM user log files for logging, fault, and performance management. The log file contains information regarding system events, failures, and periodic system performance reports.

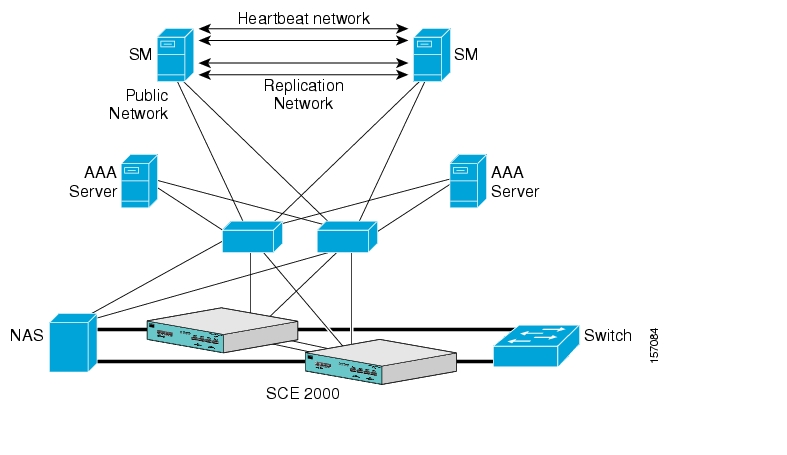

You can configure the SM to operate with or without a cluster. The added functionality when operating in a cluster topology provides powerful new features such as fail-over and high availability. (For definitions of italicized terms, see the Glossary of Terms.)

This section describes topics that are related to using the Subscriber Manager together with clusters and redundancy.

Because the Subscriber Manager plays a critical role in Cisco SCA BB solutions deployed in tier-one service provider environments, it supports a fail-over operational mode starting with SM version 2.2. This feature minimizes system downtime caused by SM failure (as further discussed in Overview).

This section introduces various concepts that are related to using a cluster of two SM nodes in a fail-over operational mode.

Note

For the purposes of this section, it is assumed that the reader is familiar with the Veritas Cluster technology.

The fail-over scheme implemented in the SM is based on the Veritas cluster technology. The cluster includes two machines, each running the SM, TimesTen, and Veritas software. The Veritas Cluster Server (VCS) software consolidates the SMs and exposes them as a single entity by providing a single virtual IP address for the entire cluster.

The cluster software distinguishes an active and a standby machine: the active machine “owns” the virtual IP address and all network connections, while the standby machine is passive until a fail-over occurs. At fail-over, the IP address is passed from the failing server to the backup server, which becomes activated and re-establishes all network connections.

When a fail-over occurs, the LEGs lose their connection with the failed SM, and reconnect to the activated (backup) SM and retransmit their uncommitted messages. The activated SM connects to the SCE platforms and performs an SCE resynchronization.

The TimesTen database replication agent constantly replicates the SM database from the active node to the standby node. This enables a fast fail-over from one SM to another, since the subscriber data in the activated machine is always valid. The two SM nodes do not communicate except for passing the subscriber data.

The VCS uses software components called “cluster agents” to monitor and control the state of resources such as Network Interface Cards (NICs), disks, IP addresses, and processes. Cisco supplies cluster agents to monitor the SM and the TimesTen database daemon and replication agent.

As part of the cluster operation, the TimesTen database daemon and replication agents are up and running regardless of the fail-over state. The SM Veritas agent monitors the daemon and the replication agent process. If either of them fails, a fail-over takes place.

Note

The SM software configuration on both the active and the standby machines must be identical. Apply the same configuration file and the same application PQI module to both machines.
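Before testing fail-over, you can confirm that the two copies of p3sm.cfg are identical by comparing their checksums. The following is a minimal sketch. It assumes you have already copied the standby node's file to the local machine, for example over FTP. The function names and file names are hypothetical.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def configs_identical(active_cfg: Path, standby_cfg: Path) -> bool:
    """Return True if the two copies of the SM configuration are byte-for-byte identical."""
    return file_digest(active_cfg) == file_digest(standby_cfg)
```

For example, `configs_identical(Path("p3sm.cfg"), Path("p3sm.cfg.standby"))` compares a local copy with a fetched standby copy. The check is deliberately strict, so whitespace-only differences are also reported. The same comparison works for the application PQI file.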

The following sections describe these concepts in further detail.

The two SM nodes operate in hot-standby mode. At any given time, one node (the active node) receives and processes all the SM events, while the other node (the standby node) waits and is ready to go into operation on fail-over. To enable seamless fail-over and to minimize fail-over time, the two SM nodes operate without an external storage device.

During the normal operation of the cluster, the active node (selected by the cluster):

Performs all SM functionality of a non-cluster environment

Provides “health” information for the cluster agent

Periodically replicates its subscriber database to the standby node

On the standby node, both the SM and the TimesTen software are running:

The SM is fully configured. (It is configured with the same configuration file and PQI application module as the active node, but does not interfere with the active node’s work.)

The SM connects to the TimesTen database, but does not connect to the LEG and the SCE devices.

The TimesTen software is operating as a replication client for the subscriber database, receiving and applying updates from the active node’s TimesTen software.

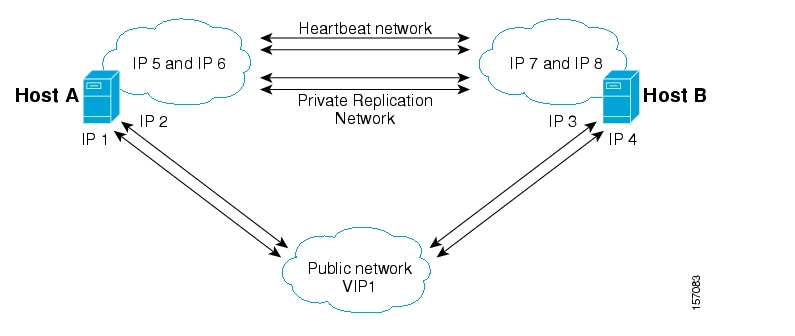

The following figure depicts an SM cluster configuration in a topology with a redundant AAA server and two SCE 2000 platforms that are cascaded for redundancy.

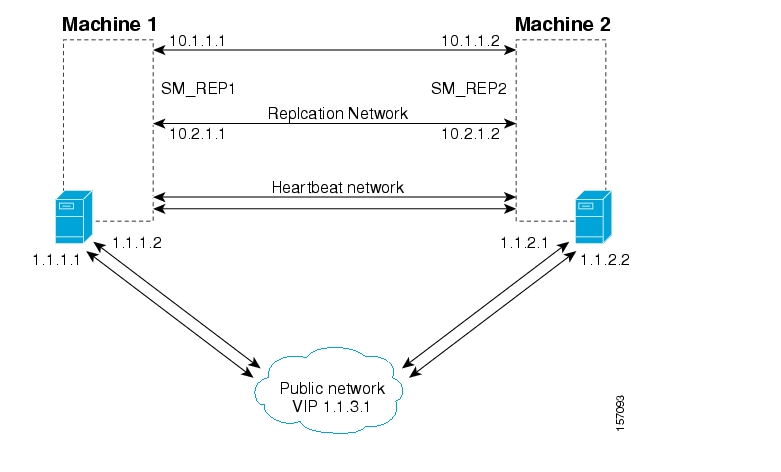

As already mentioned, an SM fail-over topology includes two SM nodes connected in a cluster scheme.

Two dedicated (private) redundant networks interconnect the two nodes:

Heartbeat network—Used by the Veritas Cluster Server to perform cluster monitoring and control.

Replication network—Used by the replication process to pass the subscriber records.

The two nodes should be located in the same site, where the heartbeat network is implemented using back-to-back connectivity between the two nodes or via redundant switches. Each node in the cluster has redundant network paths (NICs) connecting it to all of the external entities with which the SM communicates (AAA, LEG, SCE).

Each node in the cluster has a minimum of six Ethernet NICs:

Two NICs are used for the (private) heartbeat network

Two NICs are used for the (private) replication network

Two NICs are used for the public network (connectivity to SCEs and LEGs, and management of the SM).

The cluster has a virtual IP (VIP) address used for communication with the external entities. Each node in the cluster also has an IP address for administration of the node/cluster, and an IP address for replication use.

If the primary NIC of the public network fails, traffic fails over to the secondary NIC on the same node. The same IP address (VIP1) is kept, and there is no fail-over of the cluster. If the primary NIC of the replication or heartbeat network fails, traffic fails over to the secondary NIC on the same node. The same IP addresses (VIP2 and VIP3) are kept, and there is no fail-over of the cluster.

The following diagram illustrates the usage of the regular and virtual IP addresses used in cluster configuration:

Administration of the nodes uses IP1/IP2 and IP3/IP4 respectively.

The cluster IP address for external clients over the public network uses VIP1.

For further information about replication IP configuration, see Appendix E, Veritas Cluster Server Requirements and Configuration.

During normal operation, the Veritas Cluster Server mechanism automatically selects one of the SM servers to be active and the other to be standby.

The active SM server performs all the normal SM functionality. The two servers maintain the heartbeat mechanism between them, and the active server continuously replicates the subscriber database to the standby server’s database.

The standby SM server acts as a hot-standby machine, so it is fully ready to take over (become activated) with minimal fail-over time.

The following types of failures trigger the fail-over mechanism:

SM application failure, including failure of the TimesTen database.

Failure of the TimesTen daemon or of the TimesTen replication process.

SUN server failure, due to failure of one of the resources of the server; for example, failure of both of the public network NICs.

Manual activation of fail-over.

Note

Communication failure does not cause a fail-over if there is a redundant NIC. Therefore, because each SUN machine has two NICs for connecting to external devices, a failure of one of the NICs merely causes switching to the redundant NIC, without activating the fail-over mechanism.
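The trigger list and the NIC redundancy note can be summarized as a small decision function. This is a hypothetical model for illustration only. The failure labels, `NicState`, and `decide()` are not part of the SM or VCS. In a real cluster, the Veritas Cluster Server and the Cisco-supplied cluster agents make this decision.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Action(Enum):
    NONE = "no action"
    SWITCH_NIC = "switch to the redundant NIC on the same node"
    FAILOVER = "fail over to the standby node"


# Failure types that the text lists as fail-over triggers (labels are hypothetical).
FAILOVER_TRIGGERS = {
    "sm_application",     # SM application failure, including the TimesTen database
    "timesten_daemon",    # TimesTen daemon failure
    "replication_agent",  # TimesTen replication process failure
    "manual",             # manual activation of fail-over
}


@dataclass
class NicState:
    """Link state of each redundant NIC pair on the active node (True = up)."""
    public: Tuple[bool, bool] = (True, True)
    replication: Tuple[bool, bool] = (True, True)
    heartbeat: Tuple[bool, bool] = (True, True)


def decide(failure: str, nics: NicState) -> Action:
    """Return the action the text describes for a failure on the active node."""
    if failure in FAILOVER_TRIGGERS:
        return Action.FAILOVER
    if failure == "nic":
        # Both public NICs down is a server resource failure: fail over.
        if not any(nics.public):
            return Action.FAILOVER
        # Exactly one NIC of a pair down: use its partner, no cluster fail-over.
        for pair in (nics.public, nics.replication, nics.heartbeat):
            if pair.count(False) == 1:
                return Action.SWITCH_NIC
    # Cases the text does not cover (for example, both replication NICs down)
    # fall through to NONE in this sketch.
    return Action.NONE
```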

After detecting a failure, the standby SM becomes activated, and the following occurs:

The activated SM takes over the IP resources of the virtual IP mechanism.

The LEGs reconnect to the activated SM.

The activated SM creates IP connections with the SCEs and resynchronizes with them.

The activated SM starts processing information that is sent from the different LEGs and forwards it to the SCEs.

Different types of failures require different triggers for the recovery procedure. Some failures recover automatically. For example, an intra-node port link failure recovers when the link is restored. Others require manual intervention.

Recovery may take place when an SM that experienced a failure has recovered by itself, or after it has been replaced (if needed). The purpose of the recovery procedure is to return the cluster to a fully functional mode. When the recovery procedure ends, the cluster behaves as it did after installation.

The failed SM server is recovered manually or automatically, according to the type of failure that occurred. The recovery procedures, and when they are used, are described in the following sections.

Recovering from a machine reboot is a fully automatic recovery process, where the failed SM server reboots, and after establishing a connection with the other server and synchronizing the databases, the cluster of the two SM servers is ready again for fail-over operation.

The following steps occur automatically:

The reboot process is run on the node.

VCS makes the node standby.

The node boots.

VCS establishes intra-node communication and the new node joins the cluster.

The TimesTen database replication process resumes from the point at which it stopped before the reboot.

The SM in the recovered server is ready after the database recovery process is running and the SM moves from Init state to Standby state.

Replacing the server is necessary when the machine has an unrecoverable physical failure. A new machine that is installed with fresh SM, TimesTen, and VCS installations replaces the server.

Replacing the server is a manual recovery, where the failed SM server is physically replaced. After connecting the new SM server to the network, configuring it and synchronizing the two databases, the cluster of the two SM servers is ready again for fail-over operation.

To manually replace the server:

Connect a new server to the inter-node ports and intra-node ports (but leave the network ports disconnected).

Perform the basic network and cluster configurations manually (the first time).

Copy the configuration files from the active node.

If you only need to copy the p3sm.cfg file, run the following CLU command on the active node:

`p3sm --load-config --remote=<NEW-SM_IP>`

Perform the TimesTen database duplication operation. See Database Duplication Recovery.

Start the VCS operation on the recovered node.

Connect the network ports.

The SM in the recovered server is ready after the database recovery process is completed and the SM moves from Init state to Standby state.

Database duplication recovery is a manual recovery. It is needed when the standby node database loses synchronization with the active node database. Loss of synchronization can occur when one of the SM machines is replaced. It can also occur when the replication process on the active node fails to replicate all of the data inserted into its database (for example, because the replication NICs were disconnected).

To perform the database duplication recovery (on the standby node):

Stop the cluster server (VCS) monitoring of the resources. Use the VCS CLU command `hastop -local` to stop the VCS.

Stop the SM so that it is not affected by clearing the database. Use the CLU command `p3sm --stop`.

Stop the replication agent. Use the CLU command `p3db --rep-stop`.

Destroy the database. Use the CLU command `p3db --destroy-rep-db`.

Duplicate the remote database to the local machine. Use the CLU command `p3db --duplicate`.

Start the cluster server monitoring of the resources with the VCS CLU command `hastart`. This automatically starts the replication process and the SM.
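You can script the duplication recovery sequence so that it stops at the first failing step. The following is a minimal sketch, with these assumptions:

- The `hastop`, `hastart`, `p3sm`, and `p3db` commands are on the PATH of the user running the script.
- The script does not switch users. Which account (root or pcube) may run each command depends on your installation.
- The script defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess
from typing import List

# Commands from the procedure above, in order. Run on the standby node.
RECOVERY_STEPS = [
    ("Stop VCS monitoring of the resources", "hastop -local"),
    ("Stop the SM", "p3sm --stop"),
    ("Stop the replication agent", "p3db --rep-stop"),
    ("Destroy the local database", "p3db --destroy-rep-db"),
    ("Duplicate the remote database", "p3db --duplicate"),
    ("Restart VCS monitoring (starts replication and the SM)", "hastart"),
]


def run_recovery(dry_run: bool = True) -> List[str]:
    """Run the recovery steps in order and return the commands handled.

    With dry_run=True nothing is executed; the commands are only printed,
    which lets you review the sequence before running it for real.
    """
    handled = []
    for description, command in RECOVERY_STEPS:
        print(f"{description}: {command}")
        if not dry_run:
            # check=True raises CalledProcessError, so later steps never run
            # against a half-recovered node.
            subprocess.run(shlex.split(command), check=True)
        handled.append(command)
    return handled
```

Call `run_recovery()` first to review the plan, then `run_recovery(dry_run=False)` to execute it.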

The two SM servers are configured using Command-Line Utilities and a configuration file (see Chapter 4, Configuration and Management and Configuring a Subscriber Management Solution). The actual configuration is performed for the active SM and then manually replicated for the standby SM.

To perform configuration duplication:

Establish an FTP connection between the active and standby machines.

Copy the configuration file `~pcube/sm/server/root/config/p3sm.cfg` from the active node to the standby node, and apply it by using the CLU command `p3sm --load-config`.

Alternatively, you can replicate the SM configuration file to the standby machine by running the CLU command `p3sm --load-config --remote=<NEW-SM_IP>` on the active machine.

Copy the application PQI file you installed on the active node to the standby node.

Install the PQI file. Use the CLU command `p3inst --install -f <PQI file path>`.

If you have made changes in the database-related configuration files, copy them from the active node to the standby node. These files are `/etc/system` (for Solaris) or `/etc/sysctl.conf` (for Linux), and `/var/TimesTen/sys.odbc.ini`.

Note

If you copy the database-related configuration files (Step 5), you must reboot the standby node.

Note

If the database is located in different directories on the two nodes, the sys.odbc.ini files on the two nodes are not identical. In that case, copy only the changed parameters rather than the whole file.

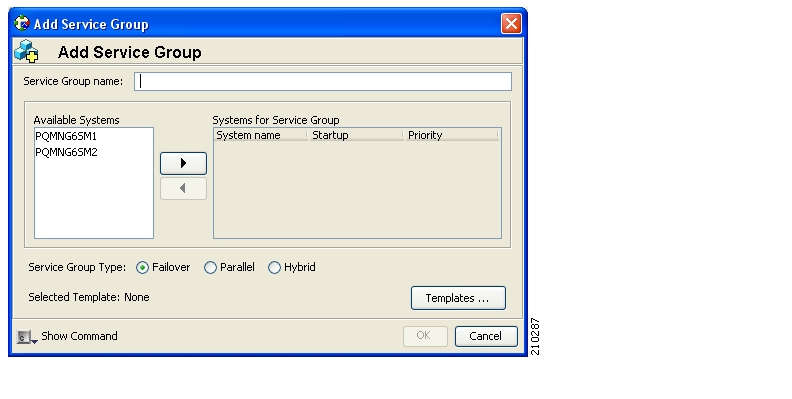

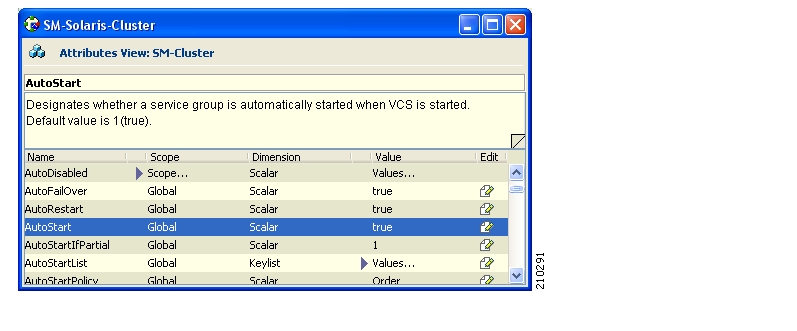

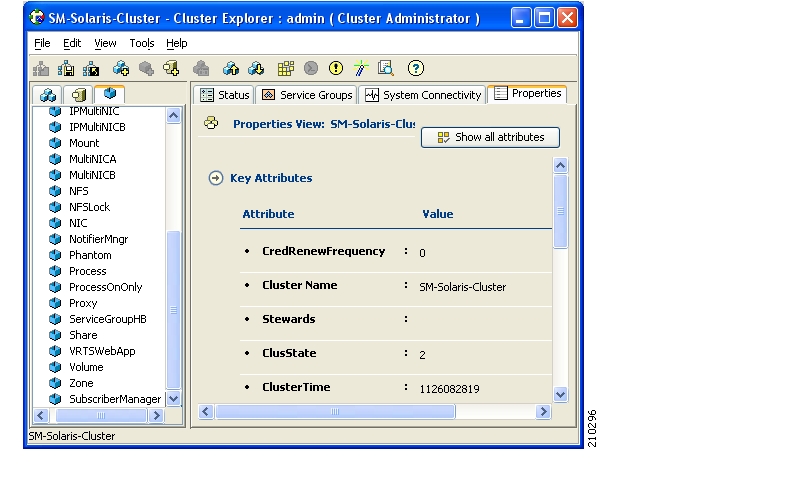

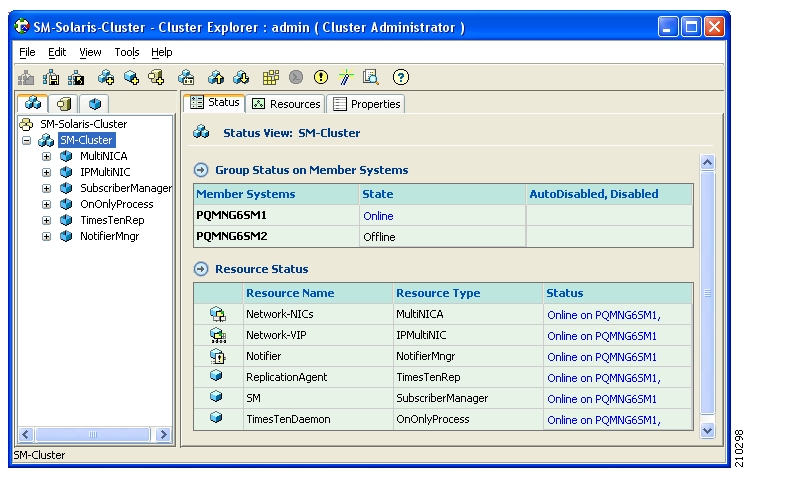

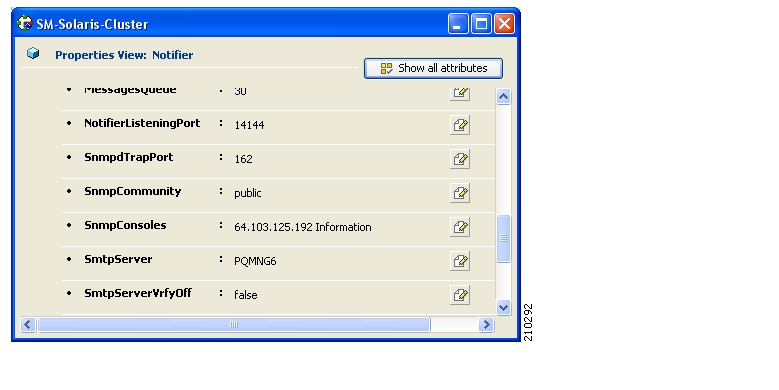

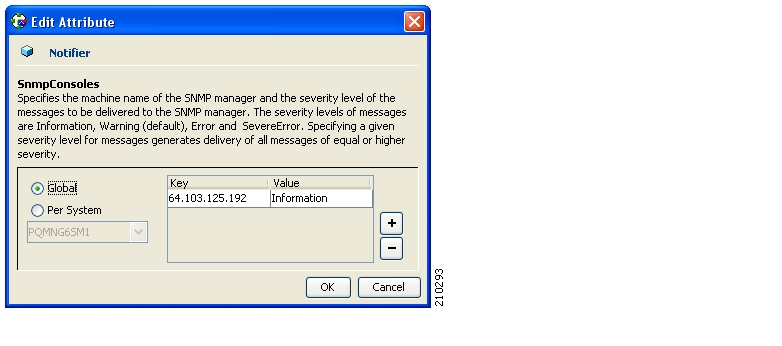

Configure and administer the Veritas Cluster Server using Veritas tools.

Notifications are enabled through SNMP traps that the Veritas Cluster Server provides. The Veritas Cluster Server supports SNMP traps such as:

Fatal failure detected (local or remote)

Secondary node starts fail-over procedure

Secondary node is operational (end of fail-over)

This chapter describes how to install, upgrade, and uninstall the Cisco Service Control Management Suite Subscriber Manager (SCMS SM). It also discusses topics related to these procedures.

Installing the SM is an automated process. It consists of executing an installation script residing on the root of the SM distribution files supplied by Cisco.

Note

For Solaris: The procedure also requires modifying the /etc/system file. Do this manually or use some other automated utility.

Note

For Linux: The procedure also requires modifying the /etc/sysctl.conf file. Do this manually or use some other automated utility.

The installation procedure installs the following components:

SM and Command-Line Utilities (CLU)

TimesTen database and DSN

Java Runtime Environment (JRE)

SM Veritas Cluster Agents

The installation procedure also includes:

Setting up a pcube user and group

Adding startup and shutdown scripts

System configuration for TimesTen (performed manually or using a script)

Replication scheme setting (performed by running a CLU; relevant only for cluster setups)

After completing installation and configuration, you can use the SM to introduce subscribers to the system.

The SCMS SM components are supplied in three distribution files:

SM for Solaris

SM for Linux

Login Event Generators (LEGs)

Each distribution file is supplied as a tar file, which is compressed by gzip and has an extension of .tar.gz. The following table lists the contents of the SM installation distribution files for Solaris and Linux.

Table 3.1. Contents of SM Distribution Files

| Path | Name | Description |
|---|---|---|
| DIST_ROOT | | Cross-platform files |
| | hooks.sh | User-defined function for upgrade |
| | install | Typical installation procedure description |
| | install-dsn.sh | TimesTen DSN configuration script |
| | installjava.sh | JRE installation script |
| | install-sm.sh | SM installation script |
| | install-tt.sh | TimesTen installation script |
| | install-vcs-agents.sh | VCS agents installation script |
| | linux-def.sh | Linux-specific definitions (only in the Linux distribution file) |
| | solaris-def.sh | Solaris-specific definitions (only in the Solaris distribution file) |
| | MANIFEST | CD information |
| | p3sm.sh | Startup and shutdown script |
| | Prerequisites | System minimal requirements list |
| | sm-common.sh | General installation script |
| | sm-dist.tar.gz | SM distribution |
| | tt-sysconf.sh | TimesTen system configuration script |
| | uninstall-sm.sh | SM uninstall script |
| | upgrade-sm.sh | SM upgrade script |
| | vcs-agents-dist.tar.gz | VCS agents distribution |
| DIST_ROOT/Java/ | | Java Runtime Environment files |
| | j2re1.4.2_05-linux.tar.gz | JRE for Linux (only in the Linux distribution file) |
| | j2re1.4.2_05-solaris.tar.gz | JRE for Solaris (only in the Solaris distribution file) |
| | LICENSCE | JRE license |
| DIST_ROOT/TimesTen/ | | TimesTen files |
| | pqb_resp_uninst.txt | Response file for TimesTen uninstall |
| | pqb-odbc-ini.txt | Open Database Connectivity (ODBC) definitions |
| | pqb-response50.txt | Response file for TimesTen installation |
| | pqb-sys-odbc-ini.txt | Open Database Connectivity (ODBC) definitions |
| | TT5125LinuxRH32.tar.Z | TimesTen for Linux (only in the Linux distribution file) |
| | TT5125Sparc64.tar.Z | TimesTen for Solaris 64-bit (only in the Solaris distribution file) |

The following table lists the contents of the LEG distribution file:

Table 3.2. Contents of the LEG Distribution File

| Path | Name | Description |
|---|---|---|
| DIST_ROOT | | Cross-platform files |
| | MANIFEST | Distribution information |
| DIST_ROOT/bgp_leg | | Border Gateway Protocol (BGP) LEG files |
| | bgp_leg.tar.gz | BGP LEG distribution |
| | Install | LEG installation procedure description |
| | Install-bgp-leg.sh | BGP LEG installation script |
| | linux-def.sh | Linux-specific definitions |
| | sm-common.sh | General installation script |
| | solaris-def.sh | Solaris-specific definitions |
| DIST_ROOT/cnr_leg | | Cisco Network Registrar (CNR) LEG files |
| | cnr-leg-dist.tar.gz | CNR LEG distribution |
| | Install | LEG installation procedure description |
| DIST_ROOT/Lease_Query_Leg | | Lease Query LEG files |
| | dhcp_forwarder.tar.gz | DHCP Forwarder distribution |
| | Install | LEG installation procedure description |
| | install-forwarder.sh | DHCP Forwarder installation script |
| | linux-def.sh | Linux-specific definitions |
| | sm-common.sh | General utility script |
| | solaris-def.sh | Solaris-specific definitions |
| DIST_ROOT/Lease_Query_LEG/sce | | Lease Query LEG SCE files |
| | leaseq.pqi | DHCP Lease Query LEG distribution |
| | dhcp_pkg.cfg | Default configuration file for package association |
| DIST_ROOT/Lease_Query_LEG/sm | | Lease Query LEG SM files |
| | leaseq.pqi | DHCP Lease Query LEG distribution |
| DIST_ROOT/rdr_dhcp_leg | | SCE-Sniffer DHCP LEG files |
| | Install | LEG installation procedure description |
| | rdrdhcp.pqi | SCE-Sniffer DHCP LEG distribution |
| DIST_ROOT/rdr_radius_leg | | SCE-Sniffer RADIUS LEG files |
| | Install | LEG installation procedure description |
| | rdradius.pqi | SCE-Sniffer RADIUS LEG distribution |
| DIST_ROOT/sce_api | | SCE Subscriber API files |
| | readme | API setup procedure description |
| | Sce-java-api-dist.tar.gz | API distribution |
| DIST_ROOT/sm_api | | SM API files |
| | readme | API setup procedure description |
| | sm-c-api-dist.tar.gz | C API distribution |
| | sm-java-api-dist.tar.gz | Java API distribution |

The SM installation distribution file contains the following documents:

Manifest—Contains the version and build numbers for all components from which the distribution files were built

Install—The SCMS SM typical installation procedures

Prerequisites—Minimal system requirements for installation of the SM

You can install the SM on the following platforms:

Solaris—SUN SPARC machine running Solaris. See Table 3.3, Minimal System Hardware Requirements, and Table 3.4, Solaris System Software Requirements.

Linux—Machine with an Intel-based processor running Linux. See Table 3.3, Minimal System Hardware Requirements, and Table 3.5, Red Hat System Software Requirements.

The machine should conform to the system requirements listed in the following tables.

Note

The specifications listed in Table 3.3 are minimal. Verify them against your specific performance and capacity requirements.

Table 3.3. Minimal System Hardware Requirements

| Item | Requirement |
|---|---|
| CPU | |
| RAM | Minimum 1 GB; see the RAM and Memory Configuration Parameters Versus Number of Subscribers table in Configuring the System Memory Settings. |
| Free Disk Space | Minimum 3 GB total, of which: |
| Network Interface | Depends on whether or not the configuration includes a cluster: |
| CDROM drive | Recommended |

Note

For the hardware and software system requirements for the Veritas Cluster Server, see Veritas Cluster Server System Requirements.

Table 3.4. Solaris System Software Requirements

| Item | Requirement |
|---|---|
| OS | Solaris 5.8 64-bit build 04/01 or later; currently, only 64-bit versions of Solaris 5.8 and 5.9 are supported. Solaris Core Installation |
| System Packages | Mandatory: Optional: |

Note

It is strongly recommended to apply the latest patches from SUN. You can download the latest patches from the SUN patches website.

Table 3.5. Red Hat System Software Requirements

| Item | Requirement |
|---|---|
| OS | Red Hat Enterprise Linux AS/ES 3.0/4.0; currently, only 32-bit versions are supported. Red Hat Core Installation |
| System Packages | Mandatory: For integrating with the C API: |

Note

It is strongly recommended to apply the latest patches from Red Hat.

All installations can be performed by executing one of the installation scripts located on the root of the SM distribution file.

You can choose to install the SM, TimesTen, and Java separately. In most cases, the SM installation script is the only script needed for completing the installation.

Each installation script displays messages describing the significant steps that are being performed. These messages are also sent to the system log for future reference. See Logging Script Messages for more information about the system log messages.

If you try to install the SM on a machine on which the SM is currently running, or to a directory in which the SM is already installed (even if not running), the operation will fail and you will be requested to upgrade the SM. See Upgrading.

This section assumes that you want to install the SM and TimesTen components, in addition to the Java Runtime Environment (JRE), and that you want to perform the necessary system configurations in order for these components to work.

There are seven steps for the installation, which are further described in the following sections:

Extracting the distribution files.

Executing the install-sm.sh script. The root user must invoke this script.

Setting a password for the user pcube.

Configuring the system memory settings.

Rebooting the computer.

Installing the SCA BB package and Login Event Generators.

Adding a user for PRPC authentication.

Note

In a high availability setup (see SM Cluster), you must install the SM Cluster VCS agents. See Installing SM Cluster Agents.

Before you can install the SM, you must load and extract the distribution files on the machine where you are installing the SM, or in a directory that is mounted to that machine.

To extract the distribution files:

Download the distribution files from the Cisco web site.

Use FTP to load the distribution files to the SM.

Unzip the file using the following command:

`gunzip SM_dist_3.0_B<build number>.tar.gz`

Untar the file using the following command:

`tar -xvf SM_dist_3.0_B<build number>.tar`
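If you script this step, for example when staging several nodes, Python's standard `tarfile` module can decompress and extract in one call. This is an optional alternative to the procedure above, not part of the Cisco installation. The function name and paths are placeholders. Unlike gunzip, it leaves the original .tar.gz file in place.

```python
import tarfile
from pathlib import Path
from typing import List


def extract_distribution(archive: Path, dest: Path) -> List[str]:
    """Extract a gzip-compressed tar distribution file into dest.

    Equivalent to gunzip followed by tar -xvf, except that the original
    .tar.gz file is kept. Returns the names of the extracted members.
    """
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, mode="r:gz") as tar:
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):
            # Python versions with extraction filters: reject absolute paths
            # and members that would escape dest.
            tar.extractall(path=dest, filter="data")
        else:
            tar.extractall(path=dest)
    return names
```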

Note

Before starting the installation, make sure that the disk space requirements listed in System Requirements are satisfied.

To execute the install-sm.sh script:

From your workstation shell prompt, move to the directory where the distribution file was extracted and run the following command:

`# install-sm.sh [-d install-directory]`

In this command, install-directory is the location in which you want to install the SM.

If you use the default install location, /opt/pcube, you need not specify the -d flag.

For additional information about the script operation, see Installing the Subscriber Manager.

After the installation script has completed successfully, set the password for the pcube user by running the following command:

# passwd pcube

Note

It is important to remember the password you have selected.

Configure the system memory according to the maximum number of subscribers. The following table lists the recommended memory configuration values based on the number of supported subscribers. These settings apply when the Quota Manager is disabled.

Table 3.6. RAM and Memory Configuration Parameters Versus Number of Subscribers

| Maximum Number of Subscribers | Required RAM | SM Process Memory Setting | TimesTen Shared Memory | TimesTen PermSize | TimesTen TempSize |
|---|---|---|---|---|---|
| 100,000 | 1 GB | 256 MB | 512 MB | 200 MB | 100 MB |
| 500,000 | 2 GB | 512 MB | 1024 MB | 512 MB | 256 MB |
| 1,000,000 | 3 GB | 768 MB | 1280 MB | 768 MB | 256 MB |
| 2,000,000 | 4 GB | 1280 MB | 2048 MB | 1536 MB | 256 MB |
| 3,000,000 | 5 GB | 1792 MB | 2560 MB | 2048 MB | 256 MB |
| 4,000,000 | 6 GB | 2048 MB | 3328 MB | 2816 MB | 256 MB |

Note

The required RAM in the table is calculated for 40 SCE connections per SM. For each additional SCE, add 25 MB to both the required RAM and the SM process memory setting.

Description of the table columns:

Maximum Number of Subscribers—The maximum number of subscribers that the SM has to support. For additional information about the maximum number of subscribers configuration, see Configuring the Maximum Number of Subscribers.

Required RAM—The RAM requirement for the machine running the SM.

SM Process Memory Setting—The required memory configuration for the SM process itself. For additional information about the SM process memory configuration, see Configuring the SM Process Memory Settings.

TimesTen Memory Settings (Shared Memory, PermSize, TempSize): The configuration required for TimesTen to run correctly. For additional information, see Configuring the System for TimesTen.

If the previous table does not list the maximum number of subscribers that you require, use the settings specified for the next higher value of Maximum Number of Subscribers. For example, for 1,200,000 subscribers, use the values specified for 2,000,000 subscribers (4 GB of RAM, and so on).

The following table lists the recommended memory configuration values based on the number of supported subscribers. These settings apply when the Quota Manager is enabled.

Table 3.7. RAM and Memory Configuration Parameters Versus Number of Subscribers

| Maximum Number of Subscribers | Required RAM | SM Process Memory Setting | TimesTen Shared Memory | TimesTen PermSize | TimesTen TempSize |
|---|---|---|---|---|---|
| 500,000 | 3 GB | 512 MB | 1280 MB | 768 MB | 256 MB |
| 1,000,000 | 3 GB | 768 MB | 1792 MB | 1280 MB | 256 MB |
| 2,000,000 | 5 GB | 1280 MB | 3072 MB | 2560 MB | 256 MB |
| 3,000,000 | 7 GB | 1792 MB | 4096 MB | 3584 MB | 256 MB |
| 4,000,000 | 8 GB | 2048 MB | 5376 MB | 4864 MB | 256 MB |

Note

The required RAM in the table is calculated for 20 SCE connections per SM. For each additional SCE, add 50 MB to both the required RAM and the SM process memory setting.
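You can combine the table lookup rule (use the next higher row) and the per-SCE adjustments into one helper. The following is a minimal sketch:

- The row values are copied from Tables 3.6 and 3.7.
- `recommend()` and `Sizing` are hypothetical names.
- RAM is converted to MB assuming 1 GB = 1024 MB.
- The per-SCE increment is added to both the required RAM and the SM process memory setting, as the notes describe. The TimesTen values are left unchanged.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sizing:
    max_subscribers: int
    ram_mb: int
    sm_process_mb: int
    shared_memory_mb: int
    perm_size_mb: int
    temp_size_mb: int


# (subscribers, RAM GB, SM process MB, shared memory MB, PermSize MB, TempSize MB)
QM_DISABLED = [  # Table 3.6: baseline 40 SCE connections, +25 MB per extra SCE
    (100_000, 1, 256, 512, 200, 100),
    (500_000, 2, 512, 1024, 512, 256),
    (1_000_000, 3, 768, 1280, 768, 256),
    (2_000_000, 4, 1280, 2048, 1536, 256),
    (3_000_000, 5, 1792, 2560, 2048, 256),
    (4_000_000, 6, 2048, 3328, 2816, 256),
]
QM_ENABLED = [  # Table 3.7: baseline 20 SCE connections, +50 MB per extra SCE
    (500_000, 3, 512, 1280, 768, 256),
    (1_000_000, 3, 768, 1792, 1280, 256),
    (2_000_000, 5, 1280, 3072, 2560, 256),
    (3_000_000, 7, 1792, 4096, 3584, 256),
    (4_000_000, 8, 2048, 5376, 4864, 256),
]


def recommend(subscribers: int, sce_count: int, quota_manager: bool = False) -> Sizing:
    """Pick the first table row that covers `subscribers`, then add the SCE increment."""
    if quota_manager:
        table, base_sces, mb_per_sce = QM_ENABLED, 20, 50
    else:
        table, base_sces, mb_per_sce = QM_DISABLED, 40, 25
    for subs, ram_gb, sm_mb, shm_mb, perm_mb, temp_mb in table:
        if subscribers <= subs:
            extra = max(0, sce_count - base_sces) * mb_per_sce
            return Sizing(subs, ram_gb * 1024 + extra, sm_mb + extra,
                          shm_mb, perm_mb, temp_mb)
    raise ValueError(f"{subscribers} subscribers exceeds the largest table row")
```

This follows the example in the text. `recommend(1_200_000, sce_count=40)` selects the 2,000,000-subscriber row of Table 3.6, which specifies 4 GB of RAM and an SM process memory setting of 1280 MB.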

There is a limit to the maximum number of subscribers that can be stored in the Subscriber Manager database. The limit is 4,000,000 subscribers for Solaris and 2,000,000 subscribers for Linux.

The Subscriber Manager default configuration supports a maximum of 800,000 subscribers.

To increase this number:

Add the following line to the [Data Repository] section of the p3sm.cfg configuration file:

`max_number_of_subscribers=number`

Restart the SM.

Note

In cluster setups, perform this on both SM machines.
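The following small check combines the default maximum (800,000), the per-platform database limits, and the configuration line above. It is a sketch only. `data_repository_line()` is a hypothetical helper, and it returns an empty string when the default already covers the requested number.

```python
DEFAULT_MAX_SUBSCRIBERS = 800_000
PLATFORM_LIMITS = {"solaris": 4_000_000, "linux": 2_000_000}


def data_repository_line(subscribers: int, platform: str) -> str:
    """Return the [Data Repository] line to add to p3sm.cfg, or "" if none is needed.

    Raises ValueError if the request exceeds the platform's database limit.
    """
    limit = PLATFORM_LIMITS[platform.lower()]
    if subscribers > limit:
        raise ValueError(f"{platform} supports at most {limit:,} subscribers")
    if subscribers <= DEFAULT_MAX_SUBSCRIBERS:
        return ""  # the default configuration already supports this many
    return f"max_number_of_subscribers={subscribers}"
```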

TimesTen requires certain changes to the system kernel configuration file (/etc/system in Solaris and /etc/sysctl.conf in Linux). These changes increase the shared memory and semaphore resources on the machine from their defaults. For additional information regarding these changes, refer to the TimesTen documentation.

Note

It is recommended that you review the /etc/system or the /etc/sysctl.conf file before running the tt-sysconf.sh script, because the script overwrites the current file settings with the values listed in the “Making the changes manually” procedure. If you want to keep some or all of the current file settings, edit the system configuration file and perform the changes manually.

TimesTen requires that certain changes be made in the operating system kernel configuration file:

For Solaris, modify the /etc/system file.

For Linux, modify the /etc/sysctl.conf file.

These changes increase the shared memory and semaphore resources on the machine from their defaults.

Making the changes automatically:

Note

It is recommended that you review the system configuration file before running the tt-sysconf.sh script, because the script overwrites the current file settings with the values listed in the “Making the changes manually” procedure. If you want to keep some or all of the current file settings, make the changes manually instead.

To make the required changes automatically, run the tt-sysconf.sh script file. The root user must invoke this script file, without arguments, as follows:

`# tt-sysconf.sh`

Making the changes manually:

You must edit the configuration file manually if the SM must support more than 100,000 subscribers. Your system's sizing requirements affect only the shared memory size. To determine the correct configuration values for your system, see the table in System Memory Settings.

For Solaris, make the required changes manually by adding the following lines to the /etc/system file and configuring the shared memory size (shmsys:shminfo_shmmax):

    *---- Begin settings for TimesTen
    set semsys:seminfo_semmni = 20
    set semsys:seminfo_semmsl = 100
    set semsys:seminfo_semmns = 2000
    set semsys:seminfo_semmnu = 2000
    set shmsys:shminfo_shmmax = 0x20000000
    *---- End of settings for TimesTen

For Linux, make the required changes manually by adding the following lines to the /etc/sysctl.conf file and configuring the shared memory size (kernel.shmmax). The four kernel.sem values are, in order, SEMMSL, SEMMNS, SEMOPM, and SEMMNI:

    # ---- Begin settings for TimesTen
    kernel.shmmax = 536870912
    kernel.sem = 250 32000 100 100
    # ---- End of settings for TimesTen

In both examples, the shared memory size is 512 MB (0x20000000 = 536870912 bytes).
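Only the shared memory size depends on sizing, so you can generate the kernel settings from the TimesTen Shared Memory value in the memory tables. The following is a minimal sketch with these assumptions:

- 1 MB = 1024 * 1024 bytes.
- The function name is hypothetical.
- The Solaris output uses the hexadecimal shmmax form shown in the Solaris example.
- The Linux output uses a decimal byte count and writes kernel.sem in standard sysctl form: four space-separated integers, in the order SEMMSL, SEMMNS, SEMOPM, SEMMNI, with # comment lines.

```python
MB = 1024 * 1024


def timesten_kernel_settings(platform: str, shared_memory_mb: int) -> str:
    """Render the TimesTen kernel settings with shmmax sized to shared_memory_mb.

    The semaphore values are the fixed values from the procedure; only
    shmmax changes with the system size.
    """
    shmmax = shared_memory_mb * MB
    if platform == "solaris":
        lines = [
            "*---- Begin settings for TimesTen",
            "set semsys:seminfo_semmni = 20",
            "set semsys:seminfo_semmsl = 100",
            "set semsys:seminfo_semmns = 2000",
            "set semsys:seminfo_semmnu = 2000",
            f"set shmsys:shminfo_shmmax = {shmmax:#x}",  # e.g. 0x20000000
            "*---- End of settings for TimesTen",
        ]
    elif platform == "linux":
        lines = [
            "# ---- Begin settings for TimesTen",
            f"kernel.shmmax = {shmmax}",
            "kernel.sem = 250 32000 100 100",  # SEMMSL SEMMNS SEMOPM SEMMNI
            "# ---- End of settings for TimesTen",
        ]
    else:
        raise ValueError(f"unsupported platform: {platform}")
    return "\n".join(lines)
```

For example, the 500,000-subscriber row of Table 3.6 specifies 1024 MB of shared memory. For Linux, this produces `kernel.shmmax = 1073741824`.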

Some installations might require changing TimesTen parameters so that the database will run as desired. However, do not make any changes if the default values suit your requirements.

Setting the multi-processor optimization:

If your system is a multi-processor machine, set the SMPOptLevel parameter of the Pcube_SM_Repository in the sys.odbc.ini file to 1. Otherwise, set it to 0 or leave it unset. The installation script sets this parameter automatically according to the number of available processors.

Setting the database size:

If your system needs to support more than 100,000 subscribers, set the values of the PermSize and TempSize parameters of the Pcube_SM_Repository in the sys.odbc.ini file. See System Memory Settings.

For example:

    PermSize=500
    TempSize=150

Note

Solaris—Remember to set the value of parameter shmsys:shminfo_shmmax in the /etc/system file to be larger than the sum of PermSize and TempSize.

Note

Red Hat—Remember to set the value of parameter kernel.shmmax in the /etc/sysctl.conf file to be larger than the sum of PermSize and TempSize.
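The following sketch covers both the sys.odbc.ini attributes described above and the shmmax rule from the notes. It assumes that PermSize and TempSize are expressed in megabytes while shmmax is in bytes. It also assumes that the DSN appears as a `[Pcube_SM_Repository]` section in sys.odbc.ini. Both function names are hypothetical. The fragment contains only the three attributes discussed here, so merge them into the existing DSN entry rather than replacing that entry.

```python
import os
from typing import Optional

MB = 1024 * 1024


def shmmax_is_sufficient(shmmax_bytes: int, perm_size_mb: int, temp_size_mb: int) -> bool:
    """Check the rule from the notes: shmmax must exceed PermSize + TempSize.

    PermSize and TempSize are given in megabytes; shmmax is in bytes.
    """
    return shmmax_bytes > (perm_size_mb + temp_size_mb) * MB


def dsn_settings(perm_size_mb: int, temp_size_mb: int, cpus: Optional[int] = None) -> str:
    """Render the Pcube_SM_Repository attributes discussed in this section.

    SMPOptLevel is 1 on multi-processor machines and 0 otherwise.
    """
    if cpus is None:
        cpus = os.cpu_count() or 1
    return "\n".join([
        "[Pcube_SM_Repository]",
        f"PermSize={perm_size_mb}",
        f"TempSize={temp_size_mb}",
        f"SMPOptLevel={1 if cpus > 1 else 0}",
    ])
```

The example values PermSize=500 and TempSize=150 add up to 650 MB. This exceeds the 0x20000000 (512 MB) shmmax value in the sample Solaris settings, so `shmmax_is_sufficient(0x20000000, 500, 150)` returns False, and shmmax would need to be raised for that example.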

By default, the SM process uses 256 MB of RAM. However, in certain application component configurations, the SM process needs additional memory to work correctly. To allocate a different amount, set an environment variable called PCUBE_SM_MEM_SIZE to the desired memory size (in megabytes). The SM start-up scripts then allocate that memory size for the SM process.

You can set this environment variable for the user pcube. Alternatively, you can configure the desired process memory size in the sm.sh file located in the home directory of the user pcube (~pcube/sm.sh).

The following example, which shows a line in the sm.sh file, defines a memory size of 512 MB for the SM process:

PCUBE_SM_MEM_SIZE=512

Note

To prevent performance degradation because of memory swapping, make sure that the machine has enough RAM for the SM process, the SM database, and all of the other applications running on this machine.

Note

To determine the correct memory values for your installation, see System Memory Settings.

Depending on the integration type, you might need to install the SCA BB package on the SM or install Login Event Generator (LEG) modules.

To perform the installation, use the p3inst command-line utility. For example:

`> p3inst --install --file=eng30.pqi`

For additional information, see Installing an Application.

It is necessary to add a user for PRPC authentication because the SCA BB application requires a username and password when connecting to the SM.

To add a user for PRPC authentication, use the p3rpc command-line utility. For example:

`> p3rpc --set-user --username=<username> --password=<password>`

To verify that the installation was successful, run a CLU utility, such as the p3sm command that displays general information about the SM.

To verify that the SM installation was successful:

From your workstation shell prompt, change to the ~pcube/sm/server/bin directory, and type:

> p3sm --sm-status

This command displays the current status of the SM.

Note

Wait a few minutes after the installation before running this command to allow the SM to become operational.

The output of this command should indicate that the SM is running.

If errors occurred during installation, the command outputs a description of them.
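Because the SM can take a few minutes to become operational, a script may poll p3sm --sm-status rather than wait a fixed time. The sketch below is a generic poll loop with injectable sleep and clock functions. The looks_running() check is an assumption for illustration only: it treats output that mentions "running" (but not "not running") as up, so verify the actual status wording of your SM version before relying on it.

```python
import subprocess
import time
from typing import Callable


def sm_status_text() -> str:
    """Return the output of 'p3sm --sm-status' (run as user pcube)."""
    result = subprocess.run(
        ["p3sm", "--sm-status"], capture_output=True, text=True, check=False
    )
    return result.stdout


def looks_running(status_text: str) -> bool:
    """ASSUMPTION: the status text contains 'running' when the SM is up."""
    text = status_text.lower()
    return "running" in text and "not running" not in text


def wait_until(check: Callable[[], bool], timeout_s: float = 300.0,
               interval_s: float = 10.0,
               sleep: Callable[[float], None] = time.sleep,
               clock: Callable[[], float] = time.monotonic) -> bool:
    """Call check() every interval_s seconds until it returns True
    (result True) or timeout_s elapses (result False)."""
    deadline = clock() + timeout_s
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

For example, wait_until(lambda: looks_running(sm_status_text()), timeout_s=600) waits up to ten minutes.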

After installing the SM, you can configure the SM to your specific needs. In particular, you should address the following parameters at this point:

topology—Cluster or standalone

introduction_mode—Pull or push

support_ip_ranges—Whether IP-ranges should be used in the installed setup

To configure the SM, edit its configuration file, p3sm.cfg, using any standard text editor. The configuration file is described in detail in Chapter 4, Configuration and Management, and in Appendix A, Configuration File Options. After you finish editing the p3sm.cfg configuration file, use the p3sm utility to update the SM with the new settings:

> p3sm --load-config
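Before loading the configuration, you may want to check the three parameters listed above for typos. The Python sketch below parses the file with configparser and checks their values against that list. It assumes an INI-style file with section headers and, to avoid assuming specific section names, searches every section for each key; the true/false spelling accepted for support_ip_ranges is also an assumption.

```python
import configparser

# Allowed values, taken from the parameter list above. The boolean
# spelling for support_ip_ranges is an assumption.
ALLOWED = {
    "topology": {"cluster", "standalone"},
    "introduction_mode": {"pull", "push"},
    "support_ip_ranges": {"true", "false"},
}


def check_sm_config(text: str) -> list:
    """Return a list of problems found in p3sm.cfg-style INI text."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    problems = []
    for key, allowed in ALLOWED.items():
        # Collect the key's value from every section that defines it.
        found = [parser[s][key] for s in parser.sections() if key in parser[s]]
        if not found:
            problems.append(f"{key}: not found")
            continue
        for value in found:
            if value.strip().lower() not in allowed:
                problems.append(f"{key}: unexpected value {value!r}")
    return problems
```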

The following procedures complement the ones described in Typical Installation:

Installing an SM Cluster: Use this procedure when installing two SM nodes for the first time.

Installing the Subscriber Manager: Use this procedure when installing the SM database in a location other than the default, or when the user pcube is already defined on the system.

Installing SM Cluster Agents: Required in a High Availability setup that uses Veritas Cluster Server (VCS).

Installing an SM cluster is similar to installing the SM on two separate machines.

To install an SM Cluster:

Before installing the SM cluster, you must first install the Veritas Cluster Server software on both nodes.

Install the SM on both machines, as described in Installing the Subscriber Manager.

Set the SM topology parameter to cluster, as described in Configuring the Subscriber Manager.

Configure the replication scheme for data-store replication to the redundant machine.

Run the CLU:

p3db --set-rep-scheme

Install the SM VCS agents, as described in Installing SM Cluster Agents.

Configure the VCS, as described in Appendix E, Veritas Cluster Server Requirements and Configuration.

Note

This installation is customizable.

To execute the install-sm.sh script:

From your workstation shell prompt, enter the following command:

# install-sm.sh [command options]

The following table lists the command options.

Table 3.8. Options for install-sm.sh

| Options | Description |
|---|---|
| -d | Specifies the install directory for ~pcube. This directory must not be an existing directory. This directory must be specified as a full pathname that begins with “/”. Default: /opt/pcube |
| -o | Specifies the existing home of user pcube as the install directory. Note that the options -d and -o are mutually exclusive. |
| -v | Specifies the directory for data storage. This directory must not be an existing directory. This directory must be on a partition with at least 1 GB of free space. This directory must be specified as a full pathname that begins with “/”. Default: InstallDirectory/var |
| -h | Prints a help message and exits. |
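The syntactic rules in Table 3.8 can be checked before the script runs. The following Python sketch assembles an install-sm.sh argument list and rejects combinations the table rules out: -d together with -o, and -d or -v paths that do not begin with "/". Whether a directory already exists, and how much free space it has, is left to the script itself.

```python
from typing import List, Optional


def install_sm_args(install_dir: Optional[str] = None,
                    use_existing_home: bool = False,
                    data_dir: Optional[str] = None) -> List[str]:
    """Build an install-sm.sh argument list following Table 3.8."""
    if install_dir is not None and use_existing_home:
        raise ValueError("-d and -o are mutually exclusive")
    args = ["install-sm.sh"]
    if install_dir is not None:
        if not install_dir.startswith("/"):
            raise ValueError("-d must be a full pathname beginning with '/'")
        args += ["-d", install_dir]
    if use_existing_home:
        args.append("-o")
    if data_dir is not None:
        if not data_dir.startswith("/"):
            raise ValueError("-v must be a full pathname beginning with '/'")
        args += ["-v", data_dir]
    return args
```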

The script performs the following steps:

Checks for validity of arguments and sufficient disk space.

Adds (or verifies the existence of) a user pcube and a group pcube.

Populates the pcube home directory with SM and CLU directory structure.

Invokes the JRE installation script with pcube home as the target directory. The JRE installation does not affect any existing Java installations.

Invokes the TimesTen installation script with pcube home as the target directory.

Creates the SM DSN for TimesTen with pcube home as the target directory. It is possible to install the SM DSN for TimesTen in a specified directory by using the -v option.

Creates startup and shutdown scripts in /etc.

Creates the shell preamble ~pcube/sm.sh, which contains environment variables that depend on the actual directory in which the SM was installed.

Example:

The following example installs the SM and CLU to a directory named /usr/local/pcube, using the default data storage directory definition.

# install-sm.sh -d /usr/local/pcube
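When you specify a data storage directory with -v, it must not already exist and its partition must have at least 1 GB of free space. A pre-flight check along these lines can catch both problems before installation. Because the directory does not exist yet, the sketch measures free space on its nearest existing ancestor with shutil.disk_usage; it interprets 1 GB as 1024**3 bytes, which is an assumption rather than a documented figure.

```python
import os
import shutil

ONE_GB = 1024 ** 3


def check_data_dir(path: str, min_free_bytes: int = ONE_GB) -> list:
    """Return a list of problems with a candidate -v data directory."""
    problems = []
    if not os.path.isabs(path):
        problems.append("path must be a full pathname beginning with '/'")
        return problems
    if os.path.exists(path):
        problems.append("directory must not already exist")
    # Walk up to the closest existing ancestor; the new directory will be
    # created on that ancestor's partition.
    parent = os.path.dirname(path.rstrip("/")) or "/"
    while not os.path.exists(parent):
        parent = os.path.dirname(parent)
    free = shutil.disk_usage(parent).free
    if free < min_free_bytes:
        problems.append(f"only {free} bytes free on the partition of {parent}")
    return problems
```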

The installation distribution file contains a set of customized Veritas Cluster Agents for monitoring and controlling SM-related resources in a cluster topology. You must install the cluster agents under the VCS bin directory.

To install VCS agents:

From your workstation shell prompt, type:

# install-vcs-agents.sh [command-options]

The following table lists the command options.

Table 3.9. Options for install-vcs-agents.sh

| Options | Description |
|---|---|
| -d | Specifies the installation directory for the agents, which must be the VCS bin directory. This directory must be an existing directory. This directory must be specified as a full pathname that begins with ‘/’. Default: |
| -h | Prints a help message and exits. |

The script performs the following steps:

Checks that the installation directory exists.

Extracts the agent distribution file to the specified directory.

Copies the VCS default-script-agent-executable from the installation directory to all agent directories.

For troubleshooting the installation, see Appendix D, Troubleshooting.

You can install an application on the SM to customize its components. You can also upgrade an existing application to a new version, or return to a previous version (rollback) of an application. Use the p3inst utility to install or uninstall an application (PQI file).

Note

You must run the p3inst utility as user pcube. The script is located in the ~pcube/sm/server/bin directory.

For additional details of how to install a specific application such as SCA BB, refer to the application installation guide.

To install or uninstall an application:

From your workstation shell prompt, enter the following command with the appropriate parameters:

> p3inst operation filename [installation/upgrade parameters]

The following table lists the p3inst operations.

Table 3.10. p3inst Operations

| Option | Description |
|---|---|
| --install | Installs the specified application PQI file on the SM. You may need to specify arguments for the installation procedure on the command line. |
| --uninstall | Uninstalls the specified application from the SM. |
| --upgrade | Upgrades the specified application. You may need to specify arguments for the upgrade procedure on the command line. |
| --rollback | Returns the specified application to the previous version. |
| --describe | Displays the contents of the specified application file. |
| --show-last | Lists the last installed PQI file. |
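When installations are scripted, a small wrapper can reject mistyped operation names before p3inst is called. The sketch below only builds the argument list; running it (for example with subprocess.run, as user pcube) is left out. It assumes that --show-last takes no file and that every other operation takes a --file= argument, as in the examples in this section; installation or upgrade parameters are passed through unchanged in extra.

```python
from typing import List, Optional

# Operations from Table 3.10.
OPERATIONS = {"--install", "--uninstall", "--upgrade", "--rollback",
              "--describe", "--show-last"}


def p3inst_args(operation: str, pqi_file: Optional[str] = None,
                extra: Optional[List[str]] = None) -> List[str]:
    """Build a p3inst argument list for one of the documented operations.

    ASSUMPTION: every operation except --show-last takes --file=.
    """
    if operation not in OPERATIONS:
        raise ValueError(f"unknown p3inst operation: {operation!r}")
    args = ["p3inst", operation]
    if operation != "--show-last":
        if not pqi_file:
            raise ValueError(f"{operation} requires a PQI file")
        args.append(f"--file={pqi_file}")
    args.extend(extra or [])
    return args
```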

Example 1:

The following example shows how to install the specified installation file to the device.

> p3inst --install --file=eng216.pqi

Example 2:

The following example shows how to uninstall the specified installation file from the device.

> p3inst --uninstall --file=oldInstallation.pqi

This section describes the system changes applied automatically by the SM installation. The SM installation adds a dedicated user and group, and startup and shutdown scripts.

Script messages are logged into the system log in the following manner:

For Solaris: The installation scripts log all their messages into the system log, which is usually the file /var/adm/messages. The messages are logged to the user.info syslog category.

For Linux: The installation scripts log all their messages into the system log, which is usually the file /var/log/messages. The messages are logged to the user.info syslog category.
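To review the installation messages afterwards, look in the platform's system log. The following sketch picks the usual log file from platform.system(), which returns "SunOS" on Solaris and "Linux" on Linux, and returns the most recent lines. Sites can redirect syslog output, so treat the paths as defaults only; reading these files usually requires root privileges.

```python
import platform
from collections import deque

# Usual syslog destinations named in this section; sites may differ.
DEFAULT_SYSLOG = {"SunOS": "/var/adm/messages", "Linux": "/var/log/messages"}


def syslog_path(system: str = "") -> str:
    """Return the usual system log file for the given (or current) OS."""
    system = system or platform.system()
    try:
        return DEFAULT_SYSLOG[system]
    except KeyError:
        raise ValueError(f"unsupported platform: {system}") from None


def tail(path: str, lines: int = 50) -> list:
    """Return the last `lines` lines of a text file."""
    with open(path, errors="replace") as f:
        return list(deque(f, maxlen=lines))
```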

During installation, a user named pcube is created (unless it already exists) with its own group. This user owns all installed SM and CLU files. The user home directory is the installation directory selected during installation. For security purposes, the user is initially created with a locked password. You must assign a new password.

The SM is started when the system boots into run level 2, and is stopped when the system leaves this run level (for example, when the machine is shut down).

The installer installs the following files for startup and shutdown:

For Solaris:

-rwxr--r-- 1 root other /etc/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rc0.d/K44p3sm -> /etc/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rc1.d/K44p3sm -> /etc/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rc2.d/S92p3sm -> /etc/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rcS.d/K44p3sm -> /etc/init.d/p3sm

For Linux:

-rwxr--r-- 1 root other /etc/rc.d/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rc.d/rc0.d/K44p3sm -> /etc/rc.d/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rc.d/rc1.d/K44p3sm -> /etc/rc.d/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rc.d/rc2.d/S92p3sm -> /etc/rc.d/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rc.d/rc3.d/S92p3sm -> /etc/rc.d/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rc.d/rc5.d/S92p3sm -> /etc/rc.d/init.d/p3sm
lrwxrwxrwx 1 root other /etc/rc.d/rc6.d/K44p3sm -> /etc/rc.d/init.d/p3sm
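These link names follow the standard System V init convention: a link in rcN.d whose name begins with S starts the service when entering run level N, a link beginning with K stops (kills) it, and the number sets its order relative to other services. The short Python sketch below decodes such paths; it parses names only and does not inspect the file system.

```python
import re
from typing import NamedTuple

# rcN.d directory (0-6, or S for Solaris single-user), S/K prefix,
# sequence number, then the service name.
_LINK = re.compile(r"/rc([0-6S])\.d/([SK])(\d+)([^/]+)$")


class RcLink(NamedTuple):
    run_level: str   # "0"-"6", or "S"
    action: str      # "start" for S links, "stop" for K links
    order: int       # sequence number; lower numbers run first
    service: str


def parse_rc_link(path: str) -> RcLink:
    """Decode a SysV init link such as /etc/rc2.d/S92p3sm."""
    m = _LINK.search(path)
    if m is None:
        raise ValueError(f"not an rcN.d start/stop link: {path}")
    level, kind, order, service = m.groups()
    return RcLink(level, "start" if kind == "S" else "stop", int(order), service)
```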

The TimesTen installer creates similar startup and shutdown scripts.

Subscriber Manager version 3.x supports several types of upgrade procedures, according to the SM version that was previously installed and the requirement (or lack of requirement) for fail-over in the new installation.

In some topologies, such as a cluster, the upgrade is not performed simply by running a script, because downtime must be kept to a minimum.

The following sections describe the various procedures needed for upgrading in different topologies and for moving from one topology to another.

To upgrade the Subscriber Manager:

Extract the distribution files as described in Step 1: Extracting the Distribution Files.

Log in as the root user.

If upgrading from version 2.x and using a cluster setup, uninstall VCS agents as described in Uninstalling VCS agents. The resource names to use are PcubeSm, OnOnlyProcess, and TimesTenRep.

If using a cluster setup, stop VCS monitoring of the SM by running the following VCS CLU command from /opt/VRTSvcs/bin:

# ./hastop -local

If upgrading from version 2.x, disable the state exchange between the SM and the SCE platform: edit the SM configuration file (p3sm.cfg), set save_subscriber_state=false, and then load the configuration file using the following command:

> p3sm --load-config

Note

You must use this CLU as user pcube.

Run the upgrade-sm.sh script from the distribution root directory.

If upgrading from version 2.x, drop the old replication scheme and restart the SM.
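Several of these steps apply only to particular setups. The sketch below encodes the conditions stated in this procedure (upgrading from version 2.x, using a cluster setup) and returns the applicable steps, in order, as a checklist. It runs nothing and covers only the steps listed here.

```python
from typing import List


def upgrade_checklist(from_major: int, cluster: bool) -> List[str]:
    """Return the SM upgrade steps that apply, in the documented order."""
    from_2x = from_major == 2
    steps = [
        "Extract the distribution files",
        "Log in as root",
    ]
    if from_2x and cluster:
        steps.append("Uninstall VCS agents (PcubeSm, OnOnlyProcess, TimesTenRep)")
    if cluster:
        steps.append("Stop VCS monitoring: /opt/VRTSvcs/bin/hastop -local")
    if from_2x:
        steps.append("Set save_subscriber_state=false in p3sm.cfg and run "
                     "'p3sm --load-config' as user pcube")
    steps.append("Run upgrade-sm.sh from the distribution root directory")
    if from_2x:
        steps.append("Drop the old replication scheme and restart the SM")
    return steps
```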

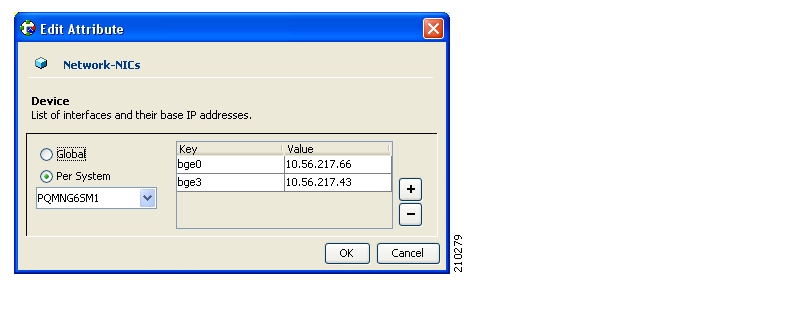

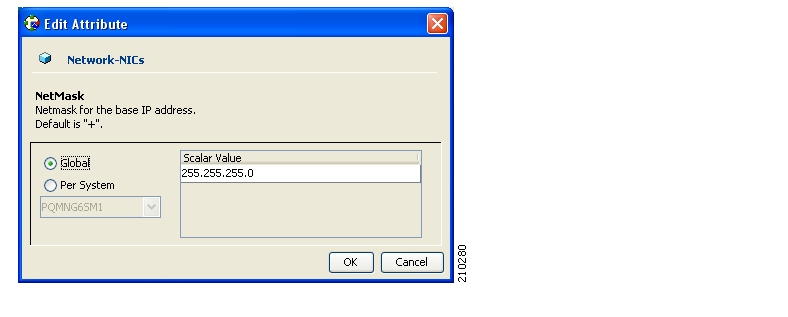

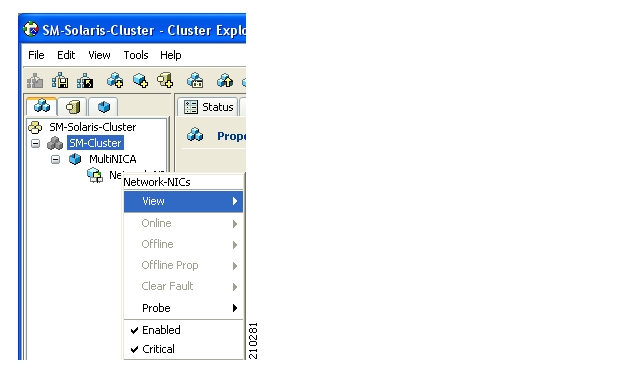

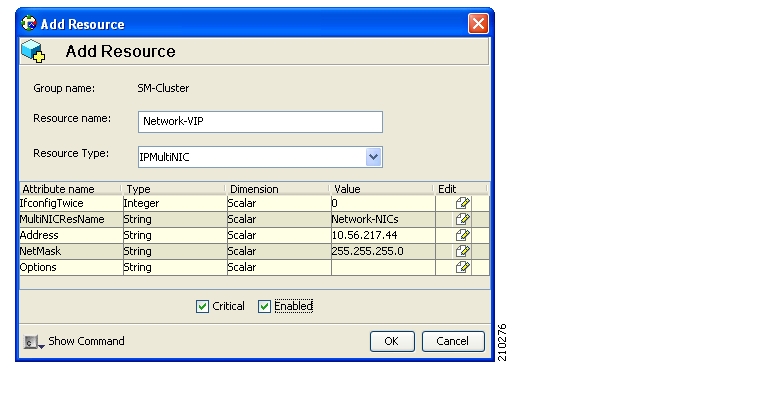

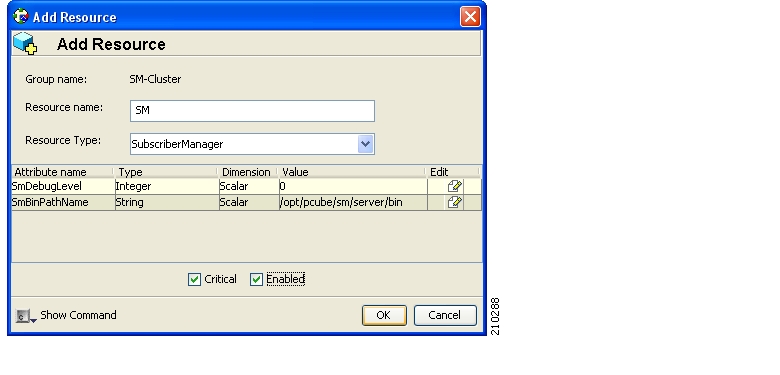

Note