data can be held until processed. No attempt is made to slow the sender's transmission rate. In fact, buffering is such a common method of dealing with changes in the rate at which data arrives that most of us would probably just assume it is happening. However, some older documentation refers to "three methods of flow control," of which buffering is one, so be sure to remember it as a separate function.
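
To make the point concrete, here is a minimal Python sketch of buffering as described above. The class `ReceiveBuffer`, the function `simulate`, the buffer capacity, and the one-segment-per-tick processing rate are hypothetical illustrations, not part of any protocol. The buffer absorbs a burst that arrives faster than the receiver can process it, but it never signals the sender; once the buffer is full, extra data is simply dropped.

```python
from __future__ import annotations

from collections import deque


class ReceiveBuffer:
    """Hypothetical receive buffer: holds arriving data until the
    receiver gets around to processing it. Nothing is ever sent back
    to the sender, so the sender's rate is not affected."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.queue: deque[str] = deque()
        self.dropped = 0

    def arrive(self, segment: str) -> None:
        # Buffering only absorbs bursts; once the buffer is full,
        # extra data is simply lost.
        if len(self.queue) < self.capacity:
            self.queue.append(segment)
        else:
            self.dropped += 1

    def process(self) -> str | None:
        # The receiver removes (processes) one segment per call.
        return self.queue.popleft() if self.queue else None


def simulate(arrivals_per_tick: list[int], capacity: int) -> tuple[int, int]:
    """Deliver a burst pattern, processing one segment per tick.
    Returns (segments processed, segments dropped)."""
    buf = ReceiveBuffer(capacity)
    processed = 0
    seq = 0
    for count in arrivals_per_tick:
        for _ in range(count):
            buf.arrive(f"seg{seq}")
            seq += 1
        if buf.process() is not None:
            processed += 1
    # Drain whatever is still buffered once the arrivals are over.
    while buf.process() is not None:
        processed += 1
    return processed, buf.dropped
```

For example, `simulate([5, 0, 0, 0, 0], capacity=10)` returns `(5, 0)`: the burst of five segments is held and processed one per tick. `simulate([5], capacity=3)` returns `(3, 2)`, because buffering alone does nothing to slow the sender.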

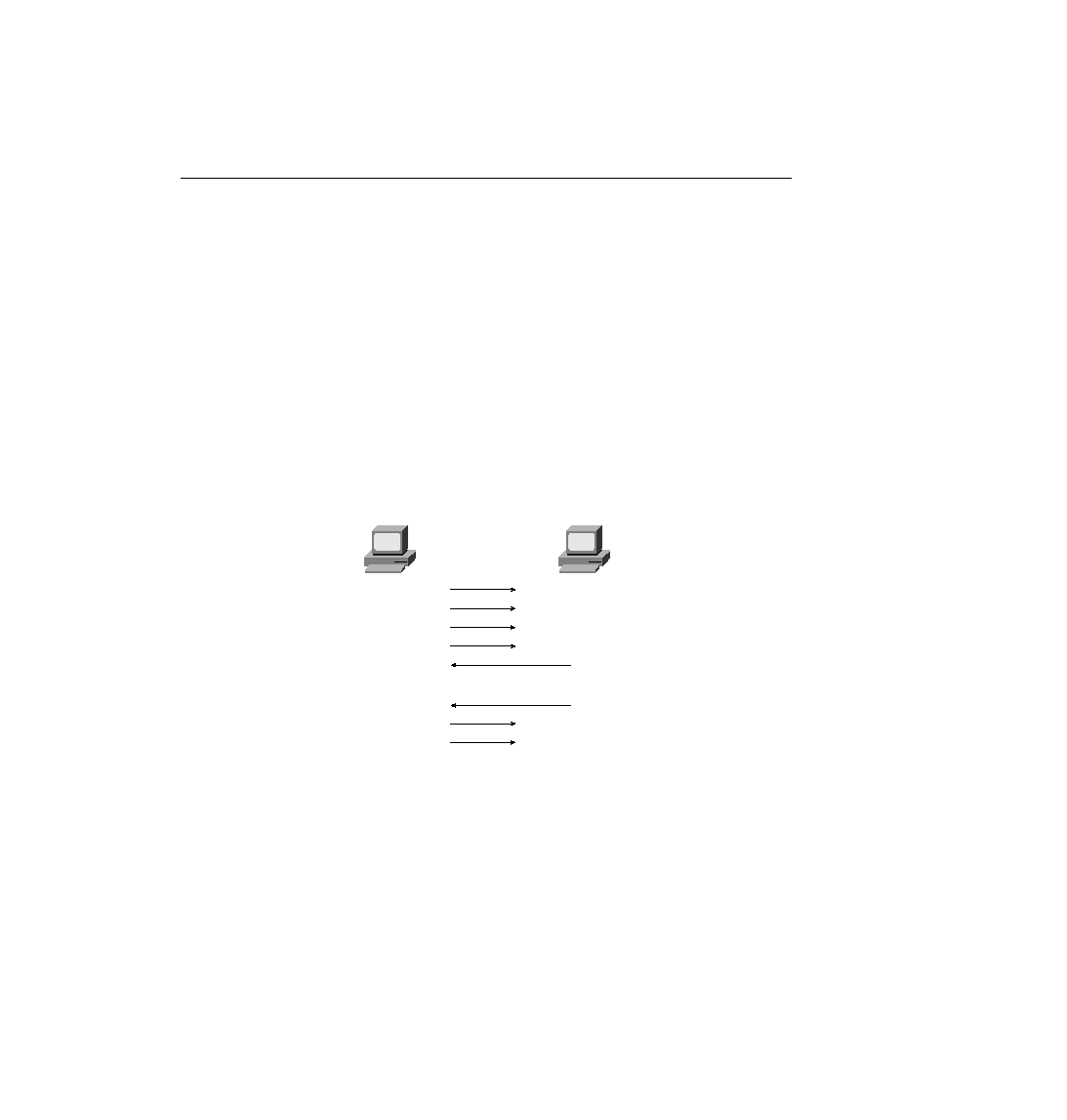

Congestion avoidance is another flow control method. Here, the computer receiving the data notices that its buffers are filling and sends either a separate PDU or a field in a header toward the sender, signaling the sender to stop transmitting. Figure 3-9 shows an example.

Figure 3-9 Congestion avoidance example

This process is used by the Synchronous Data Link Control (SDLC) and Link Access Procedure, Balanced (LAPB) serial data link protocols.
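
The stop signaling can be sketched in Python as follows. This is a simplified model under stated assumptions: the receiver sends a hypothetical `STOP` signal when its buffer reaches a high-water mark and a `GO` signal once it drains to a low-water mark, loosely in the spirit of the Receive Not Ready and Receive Ready indications used by SDLC and LAPB (their frame formats are not modeled). The thresholds, rates, and names are illustrative, and signals are assumed to reach the sender instantly.

```python
from __future__ import annotations

from collections import deque
from enum import Enum


class Signal(Enum):
    STOP = "stop"  # tells the sender to stop transmitting
    GO = "go"      # tells the sender it may resume


class Receiver:
    """Hypothetical receiver that tells the sender to stop when its
    buffer reaches a high-water mark and to resume once it drains
    to a low-water mark."""

    def __init__(self, capacity: int, high: int, low: int) -> None:
        self.capacity = capacity
        self.high = high
        self.low = low
        self.buffer: deque[int] = deque()
        self.stopped = False
        self.dropped = 0

    def accept(self, frame: int) -> Signal | None:
        if len(self.buffer) < self.capacity:
            self.buffer.append(frame)
        else:
            self.dropped += 1
        # Buffers are filling: signal the sender to stop.
        if not self.stopped and len(self.buffer) >= self.high:
            self.stopped = True
            return Signal.STOP
        return None

    def process(self) -> Signal | None:
        if self.buffer:
            self.buffer.popleft()
        # Buffers have drained enough: let the sender resume.
        if self.stopped and len(self.buffer) <= self.low:
            self.stopped = False
            return Signal.GO
        return None


def run(frames: int, ticks: int) -> tuple[list[tuple[int, str]], int]:
    """Sender tries to send 3 frames per tick; receiver processes 1 per tick.
    Returns the (tick, signal) log and the number of dropped frames."""
    rx = Receiver(capacity=8, high=6, low=2)
    paused = False
    next_frame = 0
    log: list[tuple[int, str]] = []
    for tick in range(ticks):
        for _ in range(3):
            if paused or next_frame >= frames:
                break
            sig = rx.accept(next_frame)
            next_frame += 1
            if sig is Signal.STOP:
                paused = True
                log.append((tick, sig.value))
        sig = rx.process()
        if sig is Signal.GO:
            paused = False
            log.append((tick, sig.value))
    return log, rx.dropped
```

Running `run(frames=12, ticks=12)` logs `[(2, 'stop'), (5, 'go')]` with no dropped frames: the sender pauses before the buffer overflows and resumes once the receiver has caught up. Using two thresholds rather than one keeps the receiver from flapping between stop and go on every frame.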

A gentler variation slows the sender down instead of stopping it altogether. This method is still considered congestion avoidance, but instead of signaling the sender to stop, the signal means to slow down. One example is the TCP/IP Internet Control Message Protocol (ICMP) "Source Quench" message, which the receiver or an intermediate router sends to slow the sender. The sender can slow down gradually until it no longer receives Source Quench messages.
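
The gradual slow-down can be sketched the same way. In this hypothetical Python model, one Source Quench arrives per interval while the sender's rate exceeds the bottleneck `capacity`, and the sender lowers its rate by a fixed `step` for each one. The text does not say how much a sender should slow down; the fixed decrement, the rate units, the `min_rate` floor, and the function name are assumptions for illustration, and the ICMP message itself is not modeled.

```python
from __future__ import annotations


def source_quench_demo(start_rate: int, capacity: int, step: int,
                       min_rate: int = 1) -> list[int]:
    """Hypothetical model of a sender reacting to Source Quench.

    While the sender's rate exceeds what the bottleneck can forward,
    one Source Quench arrives per interval and the sender lowers its
    rate by `step`. Returns the rate after each interval, starting
    with the initial rate."""
    if step <= 0:
        raise ValueError("step must be positive")
    rate = start_rate
    history = [rate]
    # Each pass is one interval in which a Source Quench is received.
    while rate > capacity and rate > min_rate:
        rate = max(rate - step, min_rate)  # slow down gradually
        history.append(rate)
    # Loop exits when quenches stop arriving (or the floor is reached).
    return history
```

For example, `source_quench_demo(100, 70, 10)` returns `[100, 90, 80, 70]`: three quenches arrive, and once the rate no longer exceeds 70 the quenches stop, so the sender stops slowing down.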
